
Hidden Files

The news is that C64 OS version 1.06 has just been released, March 22, 2024. I'm happy to get that out the door, as it frees up my mind to start working on important new cornerstone technologies coming to C64 OS. I'll start dropping hints about that in the coming weeks.

Version 1.06 is a more modest release than 1.05 or 1.04. But I think that's okay. v1.06 includes one new Application, three new Utilities and new features and improvements to several existing Apps and Utilities, and even some new low-level features in the KERNAL and libraries.

This latest release makes use of a combination of all of the above to provide a handy new feature for users and a potentially powerful and useful feature for developers, when put to creative use at a low level. Discussions of just this nature have already been spurred on in the developer forums on the C64 OS Discord server.

That feature is: Hidden Files. (But you knew that already from the title of this post.)

Let me give a quick walkthrough of how hidden files work in C64 OS, some of the pitfalls, some of the advantages, etc.

If you haven't yet, go grab the free v1.06 update here.

Hey, Commodore 64 user,

C64 OS v1.04 is now available! It keeps getting better.

You've come here to read one of my high-quality, long-form weblog posts. Thank you for your interest, time and input. It would be a lonely world without loyal and friendly readers like you.

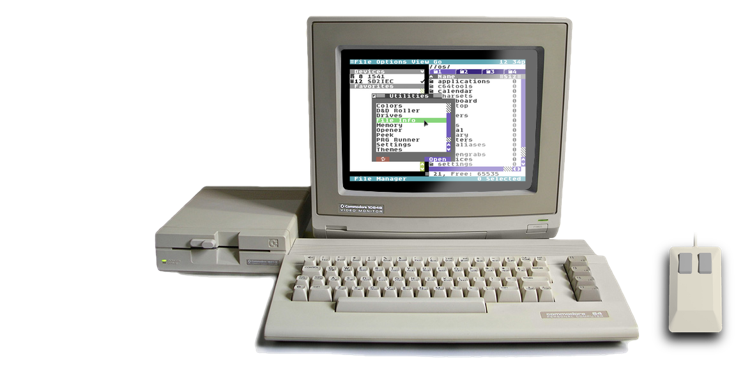

C64 OS is off to a great start with the successful v1.0 launch in 2022.

I created C64 OS because I wanted the C64 to be more capable, powerful and useful. In these blog posts I document my thoughts, plans and progress along the way, because I want others to share in the journey of learning, planning, designing and coding for our favorite home computer.

The best way to contribute and to help ensure continued development is to order a copy of the latest release, C64 OS v1.04, for yourself. The printed User's Guide is longer with improved layout and typography. The v1.04 Standard Bundle now comes with a pre-built IDE64 disk image. Plus, all the updates, improvements, bug fixes, and new features from the first four software updates have been rolled together and come pre-installed on the System Card.

Join the growing community of C64 OS users and get more out of your Commodore 64 and its many hardware expansions.

Gregory Naçu — OpCoders Inc.

Get your copy of C64 OS today.

Eliza for C64 OS

I'm super busy, so I'm going to have to keep this blog post short. First, a couple of updates. I had a great time at World of Commodore in December 2023. I had a nice vendor table there; I think it was the nicest I've ever put together.

The top image is how I imagined it when I set it up on my basement floor. The bottom is how it ended up on a table at the show. I had three machines set up, each with a quite different collection of hardware, but each running C64 OS v1.05 and available for live demos.

There's an Ultimate64 with a cableless SD2IEC and a 1581, connected to a Commodore 1702 monitor, with a MouSTer and a black wireless wheel mouse. This was the machine I used to give my presentation. There is also the C64 Luggable, which is a C64 Reloaded MK2 with an Ultimate II+, a MicroMys 5 and a wired wheel mouse. And squeezed in the middle is TheC64 mini, mounted to the underside of a tiny black HDMI monitor on a stand I built for it. It's running VICE with C64 OS installed on a virtual IDE64. It's got a wireless keyboard and TheMouse, a USB tank mouse.

Vendor table at WoC2023.

The presentation went well, in my opinion. People *gasped* when I ended the presentation with the 3D teapot. I also demoed a variety of other new technologies that were added to C64 OS throughout 2023: disk image mounting in File Manager, mouse wheel support in Toolkit, Fast App Switching with REU and Fast Reboot, custom boot modes and bitmap boot screens, and of course the new animations and 3D matrix images in Image Viewer.

The presentation was recorded, both myself and the screen, and I am waiting for TPUG to post the video to their YouTube channel, so I can post it here and on social media.

I'm now working on the next thing for C64 OS. I'm working on a multi-line text area Toolkit control. I've got it mostly working, still a few bugs to work out. I'm excited by its addition because each new class opens the door for new opportunities to create software for the C64 with ease.

This video shows it crammed at the bottom of the Today Utility and without a scroll bar, etc. This is just an early test build that I was excited to share.

Some other updates include: I finished and published the Advanced set up for IDE64 section of the C64 OS User's Guide's Chapter 2: Installation. And I split up the Software Updates page, which used to have three sections, into three separate pages:

But, following World of Commodore, and in time for Christmas morning, I dropped a little surprise. I released an Eliza Application for C64 OS! And that's what the rest of this post is about.

Fast App Switching

Hi everyone. I know it's been a while since I've written a blog post, but I have been super busy working on new C64 OS code and documentation.

A quick update on the documentation. I've been working on the Programmer's Guide, where I have completed Chapter 4: Using the KERNAL. It is an overview and discussion about how to use the C64 OS KERNAL, plus a reference-style breakdown of all 114 KERNAL routines divided into 10 modules: Memory, Input, String, Math, Screen, Menu, Service, File, Toolkit and Timers.

I've also been working on Chapter 7: Writing an Application. It's not quite ready to publish yet, but it is currently sitting around 35,000 words, and is a deep technical walkthrough of everything, from stem to stern, that goes into writing the TestGround Application that ships with C64 OS. TestGround's source code is opened in the process of writing this chapter, and it covers everything from creating an Application Bundle, to making its menu data file, creating an icon and about metadata file for your App, implementing a user interface in Toolkit, creating a custom class with custom draw routines, responding to messages, handling menu commands, loading data files and loading custom classes.

I have also been updating the online C64 OS User's Guide with new material from C64 OS v1.03, v1.04 and the brand new v1.05. I've added new documentation for the simplified VICE set up instructions for CMD HD and a new sub-chapter for set up with IDE64. And a new and updated document for advanced VICE configuration with CMD HD. Advanced VICE configuration for IDE64 and a setup guide for C64 OS on TheC64 mini/maxi are on their way.

I decided early on when I was working on the C64 OS User's Guide that I would draw a dividing line between "core" OS features and the Applications, Utilities and other features that are provided as add-ons. One reason for this division is that, from my experience with working on other OSes for the Commodore 64, I have found that in the minds of many people all things that depend on the OS get rolled into one giant mega-project. And then cool new things get dismissed as, "Oh, it's just a new feature of that C64 OS project. Neat." I want to fight against this impulse. C64 OS is an application platform, and therefore Applications written for C64 OS should stand alone as their own productions, which, incidentally, require C64 OS to be run.

Image File Formats

The free software update v1.04 of C64 OS was released last month, June 2023. I like to give titles to the releases that capture the most important features of the release. I decided to call 1.04 the Multimedia Release.

The reason for calling it a Multimedia release is because it has 4 main changes related to graphics, animation and sound. Let me briefly touch on these 4 changes, and then we'll hop into the main point of this weblog post, which is to discuss Commodore 64 image file formats, including two new ones.

1) New video modes in splitscreen and fullscreen

From v1.0, C64 OS supports an adjustable raster split, which is referred to as splitscreen mode. It's built into the OS at the KERNAL level, with some help from a shared library called gfx.lib. The gfx.lib exists so that Applications that don't need splitscreen mode can reclaim that memory: during the pre-release betas, some of the code that implements splitscreen was in the KERNAL, and it was moved to a shared library that is loaded only by the Applications that need it.

In addition to the splitscreen mode, there is also a fullscreen graphics mode. The two features are essentially the same thing; any Application that loads the gfx.lib and provides it with the pointers and configuration for the graphics data automatically gains support for both fullscreen and splitscreen modes.
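To make the raster-split idea concrete, here is a small Python sketch of the arithmetic behind a split placed on a character-row boundary. It assumes the VIC-II's default 25-row display with a vertical scroll (YSCROLL) of 3, so the first text line is raster 51, and it assumes the split falls between character rows. How gfx.lib actually positions and times its raster interrupt is not covered here, and `row_top_raster` is an illustrative name.

```python
# Sketch: raster line at which a given text row begins on the VIC-II,
# ASSUMING the default 25-row display with YSCROLL = 3. Under that
# assumption the first displayed line is raster 51 ($33) and every
# text row is 8 raster lines tall.
FIRST_ROW_RASTER = 0x33  # raster line 51
ROW_HEIGHT = 8           # raster lines per character row
ROWS = 25

def row_top_raster(row: int) -> int:
    """Return the first raster line of text row `row` (0..24)."""
    if not 0 <= row < ROWS:
        raise ValueError("row must be in 0..24")
    return FIRST_ROW_RASTER + ROW_HEIGHT * row

# A split placed above row 15 would switch modes around this raster line.
print(row_top_raster(15))  # 171
```

A real split handler would typically fire its raster interrupt slightly before this line so its register writes land on time; that timing detail is outside the scope of this sketch.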

Starting in v1.04, the gfx.lib and KERNAL have been updated to support all native VIC-II video modes. Prior to this update, only HiRes bitmap and MultiColor bitmap modes were available in splitscreen and fullscreen graphics. Now, C64 OS also supports HiRes character mode, MultiColor character mode, and Extended Background Color character mode, and each supports a custom character set. This opens the door for viewing PETSCII graphics, which are very popular these days. Most games also use a character mode with a custom tileset/character set, so this not only allows us to view screen grabs of those games, but also makes it possible to implement games for C64 OS itself using those modes.
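For readers curious about what "native VIC-II video modes" means at the register level, the Python sketch below models the three control bits involved and the five valid combinations. It is a model of the hardware registers only, not the gfx.lib or KERNAL interface, and `MODES` and `set_mode` are illustrative names. Selecting a custom character set is done through a separate register ($D018) and is not shown.

```python
# Sketch of the VIC-II control bits that select each native video mode.
# ECM and BMM live in $D011 (53265); MCM lives in $D016 (53270).
ECM = 0x40  # $D011 bit 6: Extended Background Color mode
BMM = 0x20  # $D011 bit 5: bitmap mode
MCM = 0x10  # $D016 bit 4: multicolor mode

# (ECM, BMM, MCM) for the five valid combinations; the others display black.
MODES = {
    "hires_char":        (0, 0, 0),
    "multicolor_char":   (0, 0, 1),
    "ecm_char":          (1, 0, 0),
    "hires_bitmap":      (0, 1, 0),
    "multicolor_bitmap": (0, 1, 1),
}

def set_mode(d011: int, d016: int, mode: str) -> tuple[int, int]:
    """Return new ($D011, $D016) values with only the mode bits changed."""
    ecm, bmm, mcm = MODES[mode]
    d011 &= 0xFF & ~(ECM | BMM)   # clear ECM and BMM, keep scroll and other bits
    d011 |= (ECM if ecm else 0) | (BMM if bmm else 0)
    d016 &= 0xFF & ~MCM           # clear MCM, keep XSCROLL and column select
    d016 |= MCM if mcm else 0
    return d011, d016

# After a normal boot these registers typically read back as $1B and $C8.
print([hex(v) for v in set_mode(0x1B, 0xC8, "multicolor_bitmap")])  # ['0x3b', '0xd8']
```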

Mounting and Mouse Wheel

Mounting and mouse wheel? What the heck have those two things got to do with each other? Well, they're two new features available in the most recent update to C64 OS, version 1.03.

If you purchased a copy of C64 OS, either the Starter Bundle or the Standard Bundle, then you are a version 1.X licensed user. Congratulations. That entitles you to patches, bug fixes and updates with new features. If you didn't know that you get access to new features and updates, you may be missing out on cool new things being added to C64 OS.

You can download, for free, C64 OS system updates and other independent releases from the Official Software Updates page. These updates can be installed overtop of an existing C64 OS installation to update it to a newer version. This is all handled by the "Installer" Utility which was included as part of version 1.0. There are a few things you need to know before installing an update, such as updating your current system in steps. If you're currently on 1.0, you need to update to 1.01 first. Once you're on 1.01 you can update to 1.02, and from there to 1.03, and so on.
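The step-by-step rule can be expressed as a tiny Python sketch. It assumes the chain of releases continues in order past v1.03 (the post only says "and so on"), using the version numbers mentioned on this page; `update_path` is a hypothetical helper for illustration, not part of the Installer Utility. The authoritative procedure is in the C64 Archiver and Installer guide.

```python
# Hypothetical helper, not part of the Installer Utility: list the updates
# to install, in order, when each update must be applied one step at a time.
# ASSUMPTION: releases chain in this order, as named on this page.
RELEASES = ["1.0", "1.01", "1.02", "1.03", "1.04", "1.05", "1.06"]

def update_path(current: str, target: str) -> list[str]:
    """Return each release to install after `current`, up to `target`."""
    start = RELEASES.index(current)
    end = RELEASES.index(target)
    return RELEASES[start + 1 : end + 1] if end > start else []

print(update_path("1.0", "1.03"))  # ['1.01', '1.02', '1.03']
```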

Everything you need to know is in a special guide called, C64 Archiver and Installer. And some of that information is repeated in condensed form at the top of the software updates page itself. In this blog post I'm going to talk about two new features in v1.03: Mouse Wheel Support, and Disk Image mounting in File Manager.

Gaps in Software IEC

Happy New Year Commodore 64 lovers, welcome to 2023.

C64 OS is selling well. I couldn't be happier about it. I'm currently restocking for the 4th stock run and hope to be ready to take orders again around the end of January.

Lots of stuff is going on. We're just doing some beta testing on the v1.02 and v1.03 updates to make sure these work without any issues, and then they'll be released for download from the Software Updates page.

v1.02 is very minor; it fixes a bug in the Installer Utility which is important for making sure that subsequent updates install properly. At the same time v1.03 is being released with several new features I've been working on. More details on this, when it's released, will come in another weblog post.

I thought I'd share these pictures: a day in the life of an OpCoders Inc. grunt worker, assembling C64 OS bundles and making them ready for shipment.

OpCoders Inc. assembly factory.

Many people have asked me if it is possible to use C64 OS with a 1541 Ultimate II+ or Ultimate64. This usually comes in the form of a question, "Is C64 OS compatible with the 1541 Ultimate II+ or Ultimate 64?"

This is a tough question to answer, because these devices are not just one thing with one feature. The Ultimate64 is a complete modern C64 replacement, so, can C64 OS be used on an Ultimate64? Of course! My last two World of Commodore demos have been delivered using an Ultimate64, along with both of my demos for Commodore Users Europe.

And a 1541 Ultimate II+ (its functionality is also built into Ultimate64) can be so many things: a 17xx REU, a GEORAM, a multi-SID chip emulator, a KERNAL ROM replacement, a speed loader cartridge, a source for Realtime Clock, an Ultimate Audio Module (for digital audio) and much more. So, is C64 OS compatible with a 1541 Ultimate II+? Of course! You can definitely plug a 1541UII+ into your C64 and also use C64 OS, and C64 OS can benefit from and use many of these features.

But that's not really what most people are asking. What they really want to know is, can C64 OS be installed on and booted directly from a 1541UII+ or Ultimate64? The short answer is, No. The longer answer is that these devices primarily provide a highly compatible modern implementation of a pair of 1541 disk drives (or now, 1571 or 1581 disk drives), which are backed by disk image files rather than physical floppy disk media. C64 OS cannot be installed on 1541, 1571 or 1581 disks because A) they are too low capacity, and B) they don't support subdirectories. All of this is outlined in some detail in the User's Guide, Chapter 2: Installation and in the Appendix: FAQ.

Inevitably, the curious person then asks me, "What about installing it on Software IEC?", the reasoning being that it supports subdirectories and access to the native USB device's file system. This is the point where it starts to become difficult to explain, in a nutshell, why Software IEC (at this point in time) is not sufficiently compatible with the other supported device families to be able to run C64 OS. The other device families are CMD HD, RamLink, IDE64 and SD2IEC, all of which are remarkably DOS-compatible with one another.

C64 OS v1.0 release

To all C64 fans and most especially to the people who had gotten used to reading my blog posts and getting news and updates on my progress, it is an exciting time.

C64 OS version 1.0 has been released and is available for order.

Although it seemed quiet on the blog here at C64os.com for lo these many months, with only a brief update back in May on what's going on, I've had the pedal to the metal. For those who follow me on Twitter I was still giving updates there about things that were brewing. And then in June, exactly one year after the June 2021 presentation that I gave for Commodore Users Europe, I had the privilege of giving another demo.

The 2021 demo video has, as of this writing, had 32,000 views and the more recent 2022 demo video has had 6,700 views. By some margin, the C64 OS demos have been the most popular videos on the Commodore Users Europe channel so far. Some of those views were helped by C64 OS getting featured on Hacker News and Hackaday. It is super exciting when this happens because I get to see neat little blips like this in my analytics.

A blip in the analytics when featured on Hacker News, or elsewhere.

The fun doesn't last forever and levels soon return to normal, usually the new normal becomes a bit higher, but it's neat to see. The only problem is that throughout this year, it was a shame whenever anyone or anything directed traffic to my site, knowing that I was hard at work on a big update to the site that I hadn't yet revealed.

I was very pleased to be able to finally reveal the site updates I'd been working on offline. Many changes have been made to the site, most in preparation for the C64 OS release. And finally they both got unveiled together.

It's time to get back on the horse of regular blogging, and that starts now!

Updates on C64 OS, Beta 0.8 and 0.9

Hello folks and welcome back to another blog post on C64 OS. I've got so much to talk about, and no time to talk about it.

How about the elephant in the room first? It's May of 2022, and I haven't made a blog post since December of 2021. What's more, I said the next blog post would be the follow-up, part 3 of my exposé of VIC-II timing for an FLI routine I dissected, commented and figured out from Codebase64. But this blog post is not that post. Has C64 OS development stalled? What happened, where am I at? Why the silence?

The good news is that it's basically all good news! I have been developing like a crossover between a cave troll and a 6502 code-producing machine that's powered by coffee beans. And whenever I'm not coding, I've been discussing things with my beta testers, designing a new logo, overhauling the /c64os/ subsite of c64os.com, and writing the online version of the C64 OS User's Guide. The only bad news is, I simply haven't had time to write the lengthy and detailed blog posts that I like to write. What's more, the last blog post I was working on, which is only maybe 25% written, is a long, detailed, technical piece that requires a lot of work. And every time I sat down to think about working on it, I realized that I've got a mountain of work in front of me to get C64 OS ready...

So this blog post—I don't know how long it'll end up being—is here to give you an update on all the stuff I've been working on, to make some announcements and to show off some things that are coming.

Text Rendering and MText

I wrote the first two posts in a 3-part series about the VIC-II and FLI Timing (Part I and Part II), but I have a lot to say about things I'm working on presently, and I don't want to miss the moment to talk about them while they're fresh in my mind. We'll return to Part III of the VIC-II and FLI Timing after this.

In mid-2019 I wrote a post called Webservices: HTML and Image Conversion. I have since revisited the image conversion part in the post, Image Search and Conversion Service, but have not yet returned to the idea of a simple marked-text format, which I refer to as MText.

In the meantime, I have implemented the Toolkit classes that are always memory resident:

- TKObj

- TKView

- TKScroll

- TKSbar

- TKSplit

- TKTabs

- TKLabel

- TKCtrl

- TKButton

Plus several more that are runtime loadable, relocated in memory and dynamically linked to their superclass:

- TKTable

- TKTCols

- TKInput

- TKPlaces

- TKFileMeta

- TKPathBar

- TKInfoBar

All these classes and the whole Toolkit system are now quite mature—many bugs have been found and squashed, and several optimizations have been made—and are in productive use in the File Manager and across numerous Utilities.

Most recently I have been tackling the TKText class. It is for displaying (but not editing) multi-line text. It has numerous features that we'll get into in some detail in this post.

I stumbled upon a Stack Overflow question about how to optimize for making text selections in a custom text rendering view. The consensus opinion is that implementing a custom text rendering class is "obscenely difficult." That's why operating systems provide classes to do that work for you. And that's what TKText is all about.

The reason for an OS to provide a class is twofold:

First, so you don't have to do the technical heavy lifting yourself. You can just instantiate the class, call some of its methods and—BOOM—your application has rich text handling.

Second, text handling is tricky and nuanced. If every application implemented its own solution, the quality of the experience would vary substantially between applications. (Just as it does between two Commodore 64 programs chosen at random.)

By providing a class that handles it for you, lazy programmers (lazy-good, not lazy-bad) will rely on the provided class and the users benefit from a more consistent experience. Now let's get into what TKText provides and some of the difficulties implementing it.

VIC-II and FLI Timing (2/3)

This post is part 2 of a 3-part series. If you haven't read Part 1: Memory Access yet, I'd recommend starting there first.

I like to start with a few updates on the progress of C64 OS. If you're not interested in the updates, you can skip to the main topic.

Update on C64 OS

TKInput Class, File Lock Library, and File Info Utility

I've recently finished the TKInput class, which is a single-line text input field. It supports most of what a modern text field supports: selections; cut, copy and paste to and from the clipboard; content length constraints; blur, focus and character insert events; the ability to filter or substitute typed or pasted characters; and good keyboard controls. I've also recently finished the File Info Utility, which makes use of the TKInput class for editing a file's name.
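To illustrate how a content length constraint and a character filter can interact when text is typed or pasted, here is a hypothetical Python model of a single-line field's insert path. It is not the TKInput API; `insert_text` and `filename_filter` are illustrative names, and the choice of which characters to reject or substitute is just an example.

```python
# Hypothetical model of a single-line field's insert path; this is NOT
# the TKInput API, just an illustration of a length limit combined with
# a per-character filter that can reject or substitute characters.
from typing import Callable, Optional

Filter = Callable[[str], Optional[str]]

def insert_text(value: str, cursor: int, typed: str,
                max_len: int, filt: Filter) -> tuple[str, int]:
    """Insert `typed` at `cursor`; return the new value and cursor."""
    accepted = []
    for ch in typed:
        ch = filt(ch)              # None rejects, a 1-char string substitutes
        if ch is None:
            continue
        if len(value) + len(accepted) >= max_len:
            break                  # field is full: drop the rest
        accepted.append(ch)
    text = "".join(accepted)
    return value[:cursor] + text + value[cursor:], cursor + len(text)

def filename_filter(ch: str) -> Optional[str]:
    """Example filter: reject wildcards, turn ':' into '-'."""
    if ch in "*?":
        return None
    return "-" if ch == ":" else ch

print(insert_text("AB", 1, "x:y?", 16, filename_filter))  # ('Ax-yB', 4)
```

The same path serves both typing (one character at a time) and pasting (a whole string at once), which is one reason to run every character through a single filter.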

C64 OS applications use a standard pointer to a file reference for the currently open or selected file. The system's status bar has a mode that automatically renders this file reference. Now, the File Info Utility also taps into this file reference and allows you to rename the file, back it up, or see its metadata such as size on disk, its last modified date/time, and its file type.

Any application that uses the standard open file reference automatically integrates with and benefits from the features provided by the File Info Utility.

File Lock

The directory library in C64 OS now has the ability to optionally read in the date-time stamps on files from CMD devs, SD2IEC and IDE64. And the date/time is now shown in the File Info Utility. #c64 pic.twitter.com/E7hADKBhAr

— Gregorio Naçu (@gregnacu) October 20, 2021

The File Info Utility also lets you lock or unlock the file, by making use of a new File Lock library (flock.lib). The File Lock library toggles the lock bit on any file specified by a C64 OS file reference. The library supports the 1541, 1571, 1581, IDE64, CMD HD, FD and RamLink. The only unsupported devices are SD2IEC, because they don't support file locking.
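On Commodore's own drives, the lock status is a single bit in each file's directory entry. The Python sketch below models that file-type byte as documented for 1541/1571/1581 disks. It is not how flock.lib works internally (which has to go through each device's DOS), the function names are illustrative, and it does not assume that CMD or IDE64 devices store the flag in the same bit.

```python
# Sketch of the CBM DOS directory-entry file-type byte (1541/1571/1581).
# This models the on-disk flag only; it is not flock.lib's implementation.
CLOSED = 0x80  # bit 7: file was closed properly (clear = "splat" file)
LOCKED = 0x40  # bit 6: file is locked (listed with a trailing '<')
TYPES = {0: "DEL", 1: "SEQ", 2: "PRG", 3: "USR", 4: "REL"}

def toggle_lock(type_byte: int) -> int:
    """Flip only the lock bit, leaving the type and other flags alone."""
    return type_byte ^ LOCKED

def listing_type(type_byte: int) -> str:
    """Render the type column the way a directory listing shows it."""
    name = TYPES.get(type_byte & 0x07, "???")
    splat = "" if type_byte & CLOSED else "*"
    lock = "<" if type_byte & LOCKED else ""
    return splat + name + lock

prg = 0x82                                # closed, unlocked PRG file
print(listing_type(toggle_lock(prg)))     # PRG<
```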

The File Info Utility can be used in any application. For example, in C64 OS's App Launcher, the selected alias can be edited with the File Info Utility. Locking an alias prevents the alias from being deleted from one of App Launcher's desktops. Very cool.

File Rename

The File Info Utility's main purpose is to rename files. It uses the TKInput class which gives full support for selections and the clipboard, so you can copy and paste to edit filenames.

If the new filename is taken, a disk error is generated. The error gets rendered in the status bar and the original filename is restored. An alert is generated (which blinks the border) to notify you of the error. You can also use C=+Z to revert the filename field to the current name of the file, undoing any edits you have made.
