NEWS, EDITORIALS, REFERENCE

Sorting Algorithms: QuickSort6502

Happy Easter 6502 and C64 fans! Here's some long-weekend reading, going into more detail about sorting algorithms on the C64 than you probably ever thought you wanted to know. Enjoy!

C64s are interesting computers. They have lots of character. But it's hard to put your finger on exactly why that is sometimes. What is it that endears us to this humble little machine, all these years after its commercial decline in the late 80's early 90's? My guess is that it is a confluence of artsy factors that aren't replicated on other platforms, despite their technical superiority.

Let me give you an example.

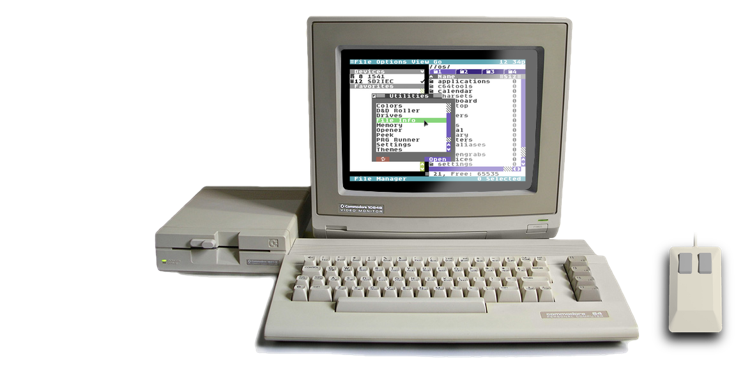

Sourced from this Twitter Moment

The above is a directory listing, probably from a 1541 disk, but could also be from any other drive that supports the standard structure. And that is just amazing! It's so artistic. It's so novel and fun, and makes the C64 artsy and nonconformant. But how is this sort of thing even possible? Why don't we see this sort of thing on other platforms?

The directory art trick, to take this one example of what's fun about a C64, is enabled by three general factors. The first is PETSCII itself. While it's missing a bunch of important business characters from ASCII, it has a brilliant set of graphical symbols that makes it possible to draw clever and complex text-based images. Check out this older post of mine, Why PETSCII Anyway?

The next factor is the simple and unvalidated structure of the directory blocks on the disk. The structure is so simple that it can easily be understood from a description in a book. And numerous tools and utilities have popped over the years that allow direct editing of the disk sectors. This allows one to make a filename have odd properties, such as a quote mid file that allows the file to be loaded by its first two characters, but still spits out the remaining characters when loading a directory. Those remaining characters can be PETSCII'd to the max, in fulfillment of your artistic desires. Or, marking files as deleted, even though they still remain in the directory. These delightful hacks are possible due to the simplicity of the structure, but also because the code that outputs it does so quite blindly and doesn't care if it deviates from a more stringent norm.

But, for the purposes of this post today, it is the third factor which is the most relevant. Directory listings on a C64 are unsorted.

This clearly is a side effect of the fact that the computer itself doesn't know anything about "files", or "directories." You can read a rather indepth discussion of this in this post, KERNAL, File Refs and Services. When a "$" (or $ plus some trailing pattern) is loaded from the disk, the DOS inside the drive reads through the directory blocks, starting at the first one, moving down the linked chain, and spitting out matching file names as it encounters them, in the order that it encounters them. Typically a C64 is not capturing this output, but just dumping it to the screen for the user to see. Holding the Control key will slow the process down (or pause it if you've got JiffyDOS.)

There is clearly no opportunity to sort the directory. In fact, the directory entries are never even stored in memory all at the same time. The drive is formatting them as BASIC lines, and sending them over the IEC bus in realtime as it finds them on the disk. The C64 is outputting the lines to the screen. But after 25 filenames have been printed out, the topmost ones keep getting pushed off the top of the screen, the screen rows are shifted upwards and out of memory altogether, as the new ones appear on the bottom.

There is something charmingly simple, naively direct, about how it all works. Nearly the smallest amount of code imaginable is running to move the magnetically charged bits from the disk into the computer and out onto the screen. And one cool advantage of this simplicity, is that the filenames always come out in a predictable order. Give each filename some well designed PETSCII love, and you've got yourself a funky art display in the directory of your game or demo before the user even tries to load in any of your programs.

But what if unsorted is not what you want?

The above is fun and clever, for games and demos. But there is a good reason why modern computers don't spit out their directories this way. What if what you really want to do is navigate around the file system of a harddrive or SD Card Reader hooked up to your C64? What if you're constantly looking for the next specific thing? A directory name, another directory name, then a specific file by its name? If you've used a modern computer for any sort of work, you're familiar with this behavior. In a directory that is sorted arbitrarily, rather than alphabetically, you would have to scanned the directory contents with your eyes, one filename at a time. It would be far more efficient if you could opt to sort the files according to the criteria you care about.

There are several problems that need to be solved in order to make this possible.

Before you can sort a directory, you first have to load all of the directory entries into memory. In an ordinary C64 program, with only the KERNAL ROM to lean on, this is highly problematic, because the KERNAL doesn't offer any memory management. You have no idea how big a directory listing is going to be until you start loading it in. And you have no idea where some free memory is into which you can start loading that directory when your main program has already been running and doing its thing for some time.

To solve this first problem, of course, C64 OS provides a memory manager. Loading directories into memory was in fact one of the example use cases I gave when arguing for why a memory manager is even necessary in a single-tasking operating system. You can read that full post here, A Few Thoughts About Memory Management. It's an older post, but it's still bang on with the aims of C64 OS. Here's the relevant excert. (If it isn't too immodest of me to quote myself):

Here's a more practical example of arbitrary length data. Loading a directory from disk, when there is already a program resident in memory. Before you load that directory in, you have no idea how big it's going to be. Mabe it has 5 files, maybe it has 144 files. You don't know. Where do you find the space to store an arbitrarily large amount of data? Well, most C64 programmers haven't bothered. Gregory Naçu, C64OS.com, 2016

Okay, so we have a memory manager. Let's say we use it to load in the complete contents of a directory. As we load from the directory, we fill the data into structures, and we make an array of pointers such that each pointer points to a directory entry struct. The next problem to solve is, how do you actually sort the directory entries?

Well, let's start with what you don't do. You don't sort the actual data structures themselves. These, by my rough calculation, will probably end up being 32 bytes each.1 Sorting, by its very nature, no matter what the algorithm, always involves swapping the positions of entries. We certainly do not want to swap 32 byte entries. Instead, we have our array of 16-bit pointers. Our sorting will only ever need to swap these 2-byte values.

About algorithms

For a computer to sort anything, it needs to have a sorting algorithm. An algorithm is a precise order of steps or operations to be performed. When the steps of a sort algoritm are carried out, in the right order, an arbitrary but finite number of times, the eventual outcome is that elements will be sorted. Since we're in a nerdy mood, the Wikipedia article: Algorithm, is pretty interesting.

There are many different sorting algorithms that have been invented through the decades of computer science. Like: Merge sort, Heap sort, Insertion sort, Quick sort, Bubble sort, and so on. With so many algorithms that all ultimately solve the same problem, they sort the elements, what is the difference between them, and how should we choose which one to use? Here's a chart I whipped up to visualize some ideas.

I realize this is pretty generic, but it gets to the point. Not all algorithms are created equally. And not all perform the same under the same set of circumstances. But let's just look at this chart for a second.

Along the bottom axis, we've got speed. All other things being equal, everyone wants the process to be fast rather than slow. When it comes to sorting, for example, no one wants to sit around while the list gets sorted. Ideally, no matter how long the list of items or how initially unsorted it is, we want the process of sorting to be instantaneous.

Along the left axis, we've got requirements. I've intentionally kept this term vague, because it is multivariate, but all rolled into one axis. It could be how much additional memory is required, or how long and complex the code to implement it has to be. Ideally, it takes zero additional memory, can be executed on a simple processor, with only a couple of lines of code.

The red circle at the top left is an objectively bad algorithm. And the irony is that it's pretty easy to come with these, because there are an infinite number of variations on a bad idea that will make it worse. Here I'll come up with a one off the top of my head. Just rearrange the elements at random, then scan through the whole list comparing one item to the next item. If the next item ever compares smaller than the current item, return to the top to randomly sort the whole list again. Eventually, this will sort the items. But it's a pretty bad idea. And it would probably take a long time. Depending on the number of items to sort, finding the solution could take millions or billions of years. Oops. That's not a good idea.

In the opposite corner, the blue circle bottom right is the idealistically best solution. It is objectively good. It has low requirements, and solves the problem quickly. But these kinds of solutions are really hard to find, because they are few and far between. And it's really hard to take a good solution, and then change it in such a way that makes it better.

What's more likely, for any given problem, is that the various solutions will fall along the slope of tradeoffs. Some conceptually simple algorithms will be kinda slow, but they'll get the job done. And as you're able to do more complicated computational manipulations, or use more complex organizational relationships, or use more memory, algorithms that can solve the problem faster become available.

A comparison of sort algorithms

There is a big difference between a computer programmer and a computer scientist.

Computer science is an academic field. It's a little like studying mathematics. It's studying and researching algorithms, data structures, and similar.

Computer Programmers write programs; the term tends to be used to describe people in industry, although of course computer scientists write programs too. Steve Cooper, Stack Overflow, 2009

As a mere computer programmer, fortunately, I can lean on the historical and ongoing work of computer scientists who actually discover and develop the algorithms we make use of.

Out of all the sorting algorithms to talk about, let's just look at 5. Why these five? Because the helpful folks at Zutopedia have made these brilliant YouTube videos that visualize, compare and analyze the Bubble, Quick, Merge, Heap and Insertion sort algorithms. Watch these four videos, they're fun and they make the algorithms highly accessible.

1) Bubble sort vs. Quick sort2) Merge sort vs. Quick sort

3) Heap sort vs Merge sort

4) Insertion sort vs Bubble sort

Okay, I hope you enjoyed those videos as much as I did. All of the visualizations were very helpful for me, and the concluding analysis at the end of the fourth video was particularly informative. I have understood the concept of Bubble sort for a long time. And I've known that it's slow, but exactly why it is so slow has not been clear until recently.

When I think about my simple four quadrant chart of tradeoffs above, Bubble sort falls squarely into the bottom left corner. It's really easy to understand, it doesn't use any extra memory, it's super easy to implement, but it's slow. And it gets progressively slower the more elements there are to sort in total.

But Bubble sort is really conceptually easy. Here's how it looks in pseudo code.

loop:

notInOrder = false

for index = 0 to total-2 {

if list[index] > list[index+1] then {

notInOrder = true

swap list[index],list[index+1]

}

}

if notInOrder goto loop

That's it! That's the whole damn thing. Just two nested loops. No recursion. If you ever are required to do a swap in the inner loop, you set one little variable to inform the outer loop that it should make another pass. Its requirements are extraordinarily low.

But it's slow. How slow is it? This screenshot from the analysis in the fourth video shows it best.

These graphs show Bubble sort, Insertion sort, and Quick sort, with just under 100 items each. The efficiency formulas below give you numbers that can be compared with one another to give their relative speeds.

For Bubble sort, if n = 100 (100 items to be sorted), that's (100 ^ 2) / 2, or 5000 compares.

For Insertion sort, if n = 100, that's (100 ^ 2) / 4, or just 2500 compares.

Insertion sort is twice as fast as Bubble sort. But it's only marginally more difficult to understand and implement, and it uses no more memory than a Bubble sort. It seems hard to argue that Insertion sort is not objectively better than Bubble sort.

For Quick sort, though, if n = 100, that's 100 x log(100), or a mere 200 compares! It seems that Quick sort has earned its name. It is however somewhat more difficult to implement, and quite a bit more difficult to understand. It has other practical problems too. It does not use any extra memory, as the elements are swapped in place. However, because it is a recursive algorithm it requires stack space. The C64 has a very limited stack space, owing to the 6510's small 8-bit stack pointer. Just 256 bytes of stack are available total, but in practice a bit less because some number will already be in use by the system and application.

In the second video, it is Merge sort that is compared with Quick sort. The results are less clear. In the first case Quick sort wins by a small margin, but that's because Quick sort can have a range of performances depending on the initial conditions, and a random factor of which pivot it chooses, a bit more on this later.

For my purposes, implementing a sort routine for C64 OS, while Merge sort sometimes beats Quick sort, Merge sort has two disadvantages that push it slightly up the tradeoff slope from Quick sort. It can be faster, but it has higher requirements. Merge sort is recursive like Quick sort, so it could run out of stack space, but it has two additional problems. It requires much more memory, because it has to move the elements being sorted into a temporary space, rather than swapping elements in place. It also seems, to me at least, somewhat more cognitively complex.

In the third video, the fine folk at Zutopedia pit Heap sort against Merge sort. And again, the results require a bit of interpretation. In their example, Heap sort performed significantly worse than Merge sort. But, if Merge sort and Quick sort were neck'n'neck, and Heap sort performed so much worse than Merge sort, why would anyone opt for it? Zutopedia's description gives the pros and cons. Heap sort uses much less memory than Merge sort, so if memory constraints are a concern then Merge sort is out of the race. But Quick sort is still faster than Heap sort on average. But that's the rub, it's only faster on average.

If your use case has a time constraint as well, Heap sort's execution time is more predictable than Quick sort's. Even though it is consistently slower than Quick sort's average, it's also consistently faster than Quick sort's worst case. This could be a factor in why some application may prefer Heap sort.

Well, what about us?

To my mind, Quick sort is the obvious choice. Low memory usage, faster than the others on average, and very very fast in the best case scenarios. It's not too hard to understand or implement. I think we've got ourselves a winner!

The Winner Is... Quick Sort

Apparently, I'm not the first person to arrive at this conclusion. This guy's answer over on StackOverflow goes into even more detail on why so many other commercial application's agree with my conclusion.

Implementing Quick Sort: Pseudo to PHP to 6502

I'm not a computer scientist, I'm a computer programmer. I can transcribe pseudo code into a real implementation lickety split. But, it would take me a lot longer to write something from a purely abstract description.

Fortunately, the Wikipedia article on Quick sort (aka Quicksort, as they spell it,) lists the following pseudo code:

algorithm quicksort(A, lo, hi) is if lo < hi then p := partition(A, lo, hi) quicksort(A, lo, p - 1 ) quicksort(A, p + 1, hi) algorithm partition(A, lo, hi) is pivot := A[hi] i := lo - 1 for j := lo to hi - 1 do if A[j] < pivot then i := i + 1 swap A[i] with A[j] swap A[i + 1] with A[hi] return i + 1

Hey, wow that's a pretty damn short implementation. My first step was to transcribe it as closely as possible into PHP. I did that, it only took a couple of minutes to do so, and it worked!

My second step was to refactor the PHP so that it more closely mirrored the way it would be implemented in 6502. Here's what that looks like, and below I'll give a few words about how it helps to transcribe from this to assembly.

PHP has the luxury of passing around many arguments to its functions. But in 6502 the array, and the zero page pointers are all effectively global variables. So I converted my first PHP implementation to using a global array of strings to sort, and a global variable for the pivot. The comparison function, for instance, only takes one argument. It always compares the argument to the global pivot.

While PHP is able to compare strings with a simple > or < operator, I opted to break it out into a separate function because on the C64 the comparator will need to be its own routine. This also allows one to easily sub in a custom comparator, which we'll see in a minute.

Additionally, in the partition function, I named the variables $x and $y because these will be the .X and .Y index registers in the 6502. Now let's take a look at my 6502 implementation.

To start with, it works! There is something perversely pleasurable about seeing something work on a C64, after the amount of work that goes into finely tuning the code by hand. I just love looking at the code, and thinking about how it works. It isn't about being pleased with my own abilities, but being pleased with seeing something inherently complex implemented and carried out by something, a machine in this case, that is so simple. Now I truly understand how a computer can sort data, down to the bits and registers.

Breakdown of the 6502 implementation

This implementation is just a proof of concept, the actual data to be sorted is just hardcoded into the bottom of the binary file. The program loads to $0801 and includes the BASIC prelude to jump into the machine code routine.

Setup

Lines 11-12: Only four bytes of zero page are required, and they are required to be in zero page for the string comparison routine. This uses indirect indexing to compare the strings. These ZP addresses are defined at the top as tmp, and piv. Piv stands for pivot, which is central concept in the Quick sort algorithm.

Lines 15-18: The comparator could easily have been hardcoded, but I wanted to show how easily an alternative comparator routine can be injected into the code. It can be done simply by writing the address of the routine into middle of the partition routine, at a label specifically set up to mark the location where the comparator is jumped to.

Lines 21-22: .X and .Y are the arguments passed into every call (it's recursive remember) to the quicksort routine. The initial values are hardcoded to the first and last indexes of the array. In a real use case one would need to get the size of the array by some other means.

For fun, I played around with insetting the initial bounds, and it works a charm. For example, imagine your array holds the names of files in a directory, but imagine that the first element holds the name of the directory itself, or the last element holds some stats about the directory such as blocks free or file count. You want to sort the files, but you want the first and last elements to remain in the first and last positions. It's super easy. Instead of setting the initial start index to 0, just set it to 1. And instead of the initial last index as length(array)-1, just set it to length(array)-2. Beautiful.

Line 25: This kicks the sort into motion, when this routine returns the elements between the intial bounds will be sorted. The lines that immediately follow, 28 to 53, end of the program by outputing the sorted data to the screen. I'll return at the end of the breakdown to describe this final step.

Recursion

Lines 57-87: Between the meat and potatoes of the algorithm, this routine is the meat. This is the only recursive part of the algorithm. It takes hi and lo array indices and implements the divide and conquer behavior of the strategy. If the two indices are the same, this branch has already been conquered and this call of the routine has nothing to do but return.

Otherwise the routine needs to attack this branch, which it does by calling partition. However, the partition routine is going to disrupt .X and .Y, so these need to be pushed onto the stack to preserve them. It is important that we push the hi index first, then the lo index, because upon return we will need the lo index first in order to divide and recurse into the lower half of the current range. Let's jump first to partition and see what it does.

Partition

Lines 91-123: Partition is the potatoes routine, it's the iterative part. It starts with the hi and lo indices that were passed to it from quicksort, and loops between them. First though, it has to pick a pivot, which is one of the elements between its hi and lo indices, inclusive, against which every other element in this range will be compared.

There is a lot written about how the routine ought to best pick the pivot. I've opted to use the Lomuto partitioning scheme, which you can read about on Wikipedia here. It is apparently less efficient than other schemes, such as the Hoare scheme. However, it is easier to implement than the others, especially on a 6502 with a very small number of registers, and it avoids the worst case scenario so it's not as bad as some other options.

In the Lomuto scheme, the element at the hi index is always selected as the pivot. At lines 94 to 97 the hi element is copied to the pivot zero page address. Again due to our limited number of registers, the .Y register, holding the hi index, is written to a compare argument at the end of the loop. This is technically a self-modification of the code, but it will make the code faster than writing this value to some other zero page address.

As we loop through the range of elements, we need one index that tracks where we are in that loop, but because we will be swapping elements along the way, we need a second index that indicates where the next swap will go to. These two have to be our .X and .Y index registers, and those are all we've got, hence why we had to self-mod the code for figuring out when the loop would be over.

On each loop the current element is copied to the tmp zero page address. Now we have two elements to compare. One in tmp and one in piv. So we JSR at line 109 to the comparator subroutine. In this particular case the elements being sorted are 16-bit pointers (more on this a bit later) to null terminated strings. The pointers have been copied into zero page addresses, so they are imminently ready to be used as pointers for indirect indexing into the strings.

Comparison

The comparator is a string comparison routine. The .Y index is required by the 6502 to perform indirect indexing, but .Y needs to be preserved for the loop index in partition. So the first thing we do, at line 151, is self-mod the code again to write the current value of .Y down to the bottom of the routine so it can be picked up again before returning. Then .Y is initialized to $FF in prep to rollover to 0.

The string string comparison works by incrementing .Y (the initial increment rolls it to 0), then we load a character from the string pointed to be tmp, and compare it to the character at the same index in the string pointed to by the pivot pointer. If the characters are equal, then we loop to fetch and compare the next characters. Eventually, when we find the first two characters that are not the same this loop will end, and the carry will indicate if tmp is smaller than the pivot. After restoring .Y to the partiion's loop index the comparator returns with the carry as the comparison result.

Uh-oh. I believe I have a bug in the strcmp routine. If the two strings are the same, they will match all the way to the end, including the null character and then the routine will go off the rails. It's unlikely to cause a crash, but I should add in a check to see if the strings have both ended. If one string ends before the other, then that's a difference that will be detected, and the zero (null) terminating byte will result in the shorter of the two strings comparing as smaller, which is the correct behavior.

Lines 110-112: After returning from the comparator, if the carry is clear the .X index is incremented to track the next swap position and then the code jumps to swap.

Swapping (It feels like the word should be "Swapition")

Lines 126-144: The swap routine is very straight forward. .X and .Y are the indices to swap in the array. Neither index register will be changed during the swapping process, so it's very simple. But, what are we actually swapping?

If all we were doing was sorting numbers, we could just compare the values of the numbers themselves and then swap those numbers directly. However, that's too limiting. I want to be able to swap structures. Well, let's say a structure is 32 bytes big, we'd be insane to exchange the places of all 32 bytes from one structure with another. I mean, what if the structures aren't 32 bytes but 512 bytes? Or bigger? It would be madness. Or, even stranger, in this example I'm sorting strings, but not every string is the same size. How would that even be possible?

A sort routine needs to sort the elements of an array, and in a proper array (a la the concept of an array in C, not a fancy hash-based "array" from higher level languages) every element must be exactly the same size. But since our strings each have different lengths the only thing that makes sense is that we build an array of 16-bit pointers. And each pointer merely points to the start of an arbitrarily long string.

The comparison is not performed on the addresses of the pointers, but rather on the data in the strings that those pointers point to. When one string is found to be bigger than the other, or smaller than the other, depending on how it works, then the pointers to those two strings are swapped within the array of pointers, and the strings themselves stay put.

In this example, the strings are hardcoded at the very bottom of the file. And the array is made of two tables, one for the hi byte and one for the lo byte which together constitute a pointer. The consequences of this will be discussed later.

Swapping the pointers is therefore performed in two steps. The hi and lo bytes at the .X index are copied to tmp, which is no longer needed. The pointer at .Y is copied to .X, and the value in tmp is copied back to .Y.

Whoops! I just spotted an optimization I can make. Tmp is already holding one of the pointers to be swapped. Swap can be made much shorter by copying .X directly to .Y, and then copying tmp to .X. I'll leave the code samples as is for now. And leave this note as a living witness to the fact that optimizations tend to pop out while scrutinizing any code closely.

End Partition

After looping over all the elements within the partition range, and swapping some elements above the pivot, the pivot will have moved, but is now in its final position.

Final resting index of the pivot defines a mid index in the initial range passed in the call to partition. That mid index is returned from partition in .Y.

End Quicksort

Lines 73-79: We finally are back to the meat routine. It now has a mid index, plus the lo and hi bytes of the range are on the stack. Here's where the recursive magic happens. We pull the lo byte from the stack, and stash the mid index. Now the stack contains the upper subrange from mid index to hi. And we've got the lower subindex from lo to mid index in .X and .Y. Decrement the mid index by one, because technically the mid index is exactly the index of the previous pivot, which is already in the place it is supposed to stay at, and bingo bango! Call quicksort again. The whole process begins again, but this time it's got a smaller range of indexes.

Lines 81-87: When the entire lower half is sorted, we're right back where left off, with the upper range in in the stack. Pull them out of the stack. This time the mid index gets pulled as .X, because it's the new lo index. And the hi index is pulled as .Y. Increment the mid index this time so it ends up one above the pivot. And bingo bango! Recursively call quicksort again to do the whole thing over again on the upper range.

Recursion still feels magical to me. When each quicksort call returns that's because that segment of the array is fully sorted. And when the original quicksort call finally returns, it's because the entire range, the range defined by the initial bounds that were manually passed in to kick things off, has been fully sorted. Love it!

Show your work!

Lines 28-54: Now the array of pointers is fully sorted, so they point to the strings in alphabetical order. In order to print them out, we'll create a loop over the array of pointers using .X.

The current pointer is copied to tmp. Then we have an inner loop using .Y as the character index into the string. Each character is output using the KERNAL routine chrout. If the string is longer than 40 characters, the string gets chopped off so it doesn't wrap on the screen. And the code jumps skips over the carriage return. If the string is less than 40 characters, a carriage return is output so the next string will start at the beginning of the next line.

And we're done! Here's how the output looks: (Spoiler alert, they're in order!)

Some final thoughts

There are a few limitations on what this routine will pull off. But, I believe the limitations will be broad enough for most of the cases to which I intend to put it to use. The first place I will be using it is in sorting directory entries that have been loaded into memory and formated into directory entry structures, which I'll discuss in more detail in a future post.

The code assumes that the array pointers all fit within a single page (although, technically, they do not need to be page aligned, it would be more efficient if they were.) That is because the .X and .Y registers of the 6502 are only 8-bit. And the the pointers are split between two pages. This allows sorting of a maximum of 256 structs.

In practice, I'm not sure if it will be able to sort that many, because it could run out of stack space in the process. I have not yet run any the stack usage numbers.

The test program shows it to be very fast. Sorting the 22 sample elements is effectively instant. Even if a directory contained up to 200 files, the sorting should be a negligible amount of time relative to the amount of time it takes to actually load the directory data from disk into memory. However, this also bodes well, because not every sort will immediately follow a load from disk. Once a directory is loaded in, the user may change the sort of the data already in memory from filename to file size, or file type. These subsequent sorts should be very fast.

A quick aside on reversing the direction of a sort. It is entirely unnecessary to take an array, say of directory entries sorted by filename, A to Z, and re-sort it in reverse just to display it on screen in the order Z to A!! If the array is already sorted A to Z, and the user opts to flip the direction, one merely needs to change the index. When it's sorted A to Z the indices run from 0 to count(array)-1. When the user flips it over, you take the index and change it to: count(array)-1 - index.

Let's say the array has 100 elements. The index are 0 to 99. But in reverse, the index of 0 gets transformed to count(array)-1 -index, or 100-1 -0, that's 99. So 0 goes to the end. But an index of 99 becomes 100-1 -99, that's 0. So 99 goes to 0. In other words, the actual sort algorithm never needs to take sort direction into consideration in the comparator. The comparator can always sort the array from smallest to biggest, and your table rendering code can modify the index when it looks up which struct to render to the screen as the user scrolls through the data. Sweet.

In the comparator routine used in this sample code, it is only able to compare the first 256 bytes of a string. In most cases, this is more than enough. We're not comparing paragraphs, we're comparing filenames, which are just 16 characters.

Finally, the whole Quick sort routine is only about 147 bytes, and it'll get smaller when I make the swap optimization. That's pretty great. Although, I'd like to note, the need to sort arrays is fairly rare. So this routine will not be included as part of the C64 OS core code. I'll likely include it as source in the distribution of C64 OS. It will be assembled into the application code of those system apps that need it, such as the App Launcher and File Manager. And it will be available as source for anyone else who wants to have a convenient sorting algorithm for their own C64 OS apps. But it will not remain resident at all times.

I think that's all I have to say! Please leave your questions, comments and corrections in the comments below. The sample code can be downloaded, assembled as a .PRG below.

DOWNLOAD: QuickSort6502.prg- You need more than 16 bytes, because you have a 16 byte filename minimum, plus you need some bytes for size and type at least. But, a page of memory only divides evenly by so many numbers. The next smallest number after 16 that divides into 256 evenly is 32. Likely these 16 bytes can be sensibly used to hold all the metadata about a file that you'd want. The even nature makes the memory management and allocation much simpler. [↩]

Do you like what you see?

You've just read one of my high-quality, long-form, weblog posts, for free! First, thank you for your interest, it makes producing this content feel worthwhile. I love to hear your input and feedback in the forums below. And I do my best to answer every question.

I'm creating C64 OS and documenting my progress along the way, to give something to you and contribute to the Commodore community. Please consider purchasing one of the items I am currently offering or making a small donation, to help me continue to bring you updates, in-depth technical discussions and programming reference. Your generous support is greatly appreciated.

Greg Naçu — C64OS.com