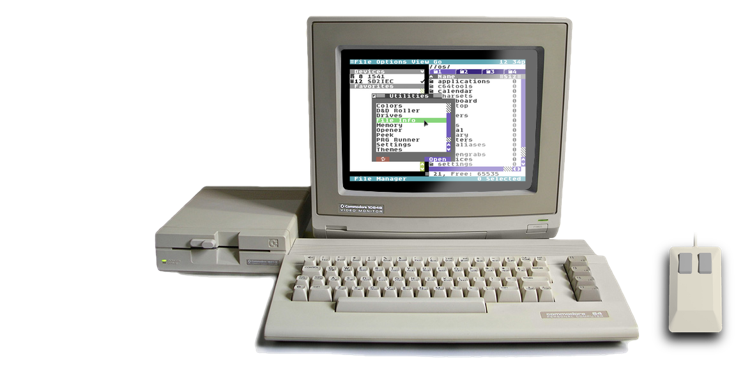

NEWS, EDITORIALS, REFERENCE

Memory Manager Development

Way back near the beginning of this blog, in a post called A Few Thoughts About Memory Management, I argued that even a single-tasking operating system, like C64 OS, needs to have something akin to a modern memory manager. And that what the Programmers' Reference Guide describes as memory management is more like a ten-thousand foot overview of how general areas of the C64's 16-bit addressing space are used. Plus a few basics are covered, such as how to use the 6510's processor port to bank ROM and I/O in and out in place of the underlying RAM, or how to tell BASIC to use less RAM so you can safely stow an assembly language program up where BASIC might otherwise try to overwrite. However, all of this as interesting and useful as it is, is not what we think of as a memory manager today.

Memory Management on PCs/Macs

The the follow-up post a week later, A Hybrid Memory Manager, I go into some detail about my plans for a memory manager in C64 OS. Along the way I describe a few tidbits of how memory management works on PCs with lots and lots of RAM, certain problems that exist with all memory managers and how those problems are sometimes mitigated on big boy computers. But also how I might go about solving those problems in C64 OS.

I want to start here by going over a few things that I said in my earlier posts and correct myself. First, it is true that the C64 does not have virtual memory, and that PCs use virtual memory in such a way that a program sees the address space it is working out of as different than the physical addresses, that perhaps the CPU sees. However, it turns out that I was a bit off on what virtual memory can do. I thought that it presents each app with the same memory address space, even though on the underlying hardware that memory space may be shifted way up higher in physical memory. It is actually quite a bit more sophisticated than this. If all it did was some sort of a single-range-based shift, fragmentation problems—which I know are an issue—would not really be improved by the virtual memory mechanism. In fact, virtual memory mechanisms remap memory in a much more piecemeal way than I thought they were capable of. And this really helps alleviate fragmentation issues.1

The next thing I wanted to correct is that I said memory is allocated with one mechanism in modern OSes, and that if you want a megabyte, you can ask the OS for a megabyte and it serves it to you out of the same region of memory as if you ask for 2 bytes. This is not entirely right. Fragmentation is still an issue that affects modern memory managers, and one solution to the problem is to divide up allocations into different kinds depending upon their size or their expected lifetime, or both. And allocating those different kinds out of different regions of memory. For example, if you were going to allocate lots of large but temporary blocks of memory AND you were going to allocate lots of small chunks of memory that will be long lasting, you can alleviate fragmentation problems by not allocating those two things out of the same region of memory. To see why, consider what might happen if you allocated like this: First a one megabyte block. Then a 10 byte block. Then a 2 megabyte block. The three blocks would end up in memory back-to-back. Then imagine you deallocate the 1 meg block, and then the 2 meg block, but leave the 10 byte block allocated, because it's long living. The problem is that what is effectively a 3 megabyte space is split up by the presense of that little 10 byte block that may hang out for a long time. Even a sophisticated coallesing allocator won't help, not until that 10 bytes gets deallocated.

As I learned, the underlying virtual memory hardware can work around the above described situation, but I don't think it's ideal and it may be less efficient. Instead, if you know the different characteristics of the allocations, such as that some will be long-lived and others short-lived, you can allocate them from different places. In this case what happens is that you allocate 1 meg from region A. Then you allocate 10 bytes, but from region B. Next you allocate 2 megs from region A. The 1 and 2 megabyte blocks end up back-to-back in region A, and the 10 byte block is off somewhere else in region B. If you then consequently deallocate the short-lived 1 and 2 megabyte blocks, the free space can be coallesced back into a 3 megabyte contiguous block. There is a technical name for these regions from which allocations can be made, they are called memory pools. And this is more or less exactly the idea I had in mind when I described a "hybrid memory model." Which I'll describe in more detail below.

False efficiencies

I'm still relatively new to programming in 6502. There are lots of little tricks that I am

learning on IRC and by studying other people's source code. One such trick I just learned was

checking if a 16-bit pointer is null (both bytes are zero) using a combination like:

LDA #<pointer ORA #>pointer BNEwhich I covered in my recent post Making Course Corrections, Timers. It's a clever little combination of instructions that, upon seeing it makes perfect sense. But prior to seeing it I was thinking that I would have to first do an LDA with the low byte, then a BNE, then, if it was zero, I'd have to do another LDA with the high byte and another BNE. A small difference to be sure, but it's just an example of small efficiencies you learn with experience.

The memory manager in C64 OS is one of the first things I knew I wanted to write. And in fact, after the OS's main jump table, the memory manager routines are the first code module, which is to say that after the jump table the memory manager resides highest in memory. It is safe to say that when I wrote those routines I was just barely cutting my teeth on 6502. It might seem like a crazy thing to start with. (A freakin' memory manager!? My first 6502 code??!) But, I'm already a pretty experienced programmer so it was just a matter of transferring my understanding from higher level languages into assembly. It was a fun exercise.

When I first began writing in 6502 and planning C64 OS, I was unnaturally obsessed2 with the smallness of my code. I think switching from web development where you have a virtually unlimited amount of memory to endlessly add new features, it was a shock to my system to even imagine how I could fit all that I want to fit into the C64 OS Kernal into just 4K (which I have now expanded to a much more realistic goal of 8K.)

In C64 OS's memory manager there are routines to allocate and free pages. Where a page is a 256 byte block aligned such that the first byte is $XX00 and the last is $XXFF. There are 256 such pages in a 16-bit address space. 256 x 256 bytes makes 64 kilobytes. So my thinking was to have a map of which pages are free, much the way the BAM (block allocation map) works on 1541/71/81 drives and CMD native partitions. They use 1 bit per block. If I used 1 bit per page I'd need (256 / 8 bits = 32 bytes) 32 bytes to map the entire memory space. Surely, I thought, using 32 bytes instead of 256 has got to be 8 times more memory efficient!

Initially I thought I'd make the page allocator really simple. You call it, you get a page, done. The program would just look for the first high bit in the map, set it low and return the corresponding page address. I implemented that, but I quickly realized that there are situations where you absolutely want to have multiple pages that are guaranteed to be consecutive. If you call the routine several times in a row, you will get several pages but they might not be back to back, depending on the history of allocations. So I changed pgalloc to take a count. Now the code looks through the map for that many bits high in a row, sets them all low, and returns the address of the first page of the range. pgfree was also changed so you can pass the first page and a count and it will lower that many bits in a row in the map. This feels like a reasonable progression of thought and development.

As it turns out, manipulating bits in 6502 is kind of a pain in the butt. It certainly isn't free, it requires code. And every byte of code extra that you need is one less byte of advantage you thought you were getting by using a 32 byte map instead of a 256 byte map. There is also the added mental overhead in the complexity of the code, and it's much easier for there to be bugs in complicated code. When I started to write the Memory Managed Loaders I quickly realized that when loading executable code that cannot be relocated, I was going to have to do more complex bit manipulation in the memory map. I knew it was going to be such a pain that I didn't even want to do it. I just kept procrastinating.

Then I had a sudden flash of insight. Maybe using bits instead of bytes wasn't such a great idea after all.

The C64's Memory Map, Under C64 OS

There is 64K of RAM in a C64. But there are restrictions on accessing parts of it, and it's divided up under different areas for different purposes. What this means is that memory that the memory manager is able to hand out is restricted in some ways, it can't just serve out of the whole 64K. Let's see what that looks like again, but this time showing what I might use these areas for in C64 OS.

+--------------------------------------------+ | $E000 - $FFFF - KERNAL ROM 8K | | - BITMAP SCREEN BUFFER | | - MUSIC BUFFER | | - UNSTRUCTURED FREE SPACE | +--------------------------------------------+ | $D000 - $DFFF - I/O 4K | | - MEM MAP, SCREEN BUFFER | +--------------------------------------------+ | $B000 - $CFFF - C64 OS KERNAL 8K | | | | | | | +--------------------------------------------+ | $0800 - $AFFF - PAGE ALLOCATED SPACE 42K | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | +--------------------------------------------+ | $0000 - $07FF - SYSTEM, STACK, SCREEN 2K | +--------------------------------------------+

The KERNAL rom is 8K of code and constants, but no variables, because the nature of a ROM means it can't vary. This means it has to references all of its variables out of RAM somewhere. The same is true for the BASIC rom (although C64 OS has BASIC permanently patched out.) These roms use the first 2K of space for all their variables. With BASIC patched out, C64 OS's KERNAL has plenty of nooks and crannies throughout that first 2K for its own pointers and variables. These variables are collectively referred to as system workspace. The first 2K also contains the hardware stack, and the VIC is configured to have its screen memory in there. This includes the sprite pointers and the sprite data for the mouse cursor. For obvious reasons, we won't be allocating memory pages out of the first 2K. Together that's 8 pages. (2048 bytes / 256 bytes = 8 pages).

C64 OS's KERNAL occupies all of HI MEM, that's the 4K that is $C000 to $CFFF, right before where I/O starts. I originally, crazily, thought I'd try to fit everything in just 4K. I've almost filled 4K already, and I have a lot more to do. I've decided to try to fit everything in 8K instead. So the C64 OS KERNAL will extend down another 4K, that's halfway through where the 8K BASIC rom sits, but it's patched out. We obviously won't be serving pages out of the 8K from $B000 to $CFFF. That's 32 pages.

The memory under I/O can be used but it is has limitations. When I/O is mapped out, the I/O chips themselves continue to function but they can't be read from or written to by code. So, naturally, you cannot put executable code under I/O that itself attempts to use I/O. The VIC chip continues to function and produce video output. But without access to I/O you cannot change its registers, including updating the positions of sprites, for example. Similarly, you cannot scan the keyboard or check for joystick input via code that's under I/O. Nor can you write code that accesses the serial bus or the user port, or tries to adjust registers in the SID chip. As a consequence of these limitations, it doesn't make a lot of sense to me to have the memory manager hand out pages that are under I/O. However, there is free memory available underneath I/O. It just has to be used carefully. It is ideally suited for data that is not code, or possibly code of a very specific variety that does not need to use I/O, and that will not call anything in the KERNAL that might subsequently call anything in I/O. So, it's delicate, it has to be planned, it can't just be handed out as if it were any other uncontentious area of memory. Okay, that's 16 pages.

Lastly, at the very top of memory, the last 8K following I/O is the C64's KERNAL rom. A somewhat similar situation exists with the KERNAL rom. You can't call KERNAL code from code that is executing in ram that is under the KERNAL. Nor can you call any code in, say, the C64 OS KERNAL that itself will call code in the KERNAL rom. Reminds me of I/O. There is a giant patch of 8K of ram tucked away under that rom, but how it can be used is delicate. For this reason, just like with I/O, the page manager cannot just start handing out pages to application code that asks for some space as though it were any ordinary uncontentious area of memory. The program that gets a page under the KERNAL would have to be aware of that and take steps to patch the KERNAL in and out. But when the KERNAL gets patched out, something needs to handle the interrupts. It gets complicated. That's another 32 pages.

What we're left with is a sizable 42 kilobyte chunk of contiguous memory, smack between the end of system workspace, and where the C64 OS KERNAL starts. After the C64 OS KERNAL we have two specialized areas, 4K and 8K respectively. It is only from that 42K space that the memory manager will allocated pages. 42K is 168 pages. (42 * 1024 / 256 = 168).

Unexpected Gains

So what we have is 8 pages of system space, 168 pages of application space from which the memory manager tracks and allocates free pages, 32 pages of C64 OS KERNAL, 16 pages of special ram beneath I/O, and 32 pages of special ram beneath the KERNAL rom. 8 + 168 + 32 + 16 + 32 = 256 pages. It all adds up.

I realized pretty early on that it makes a lot of sense to store the memory map (for page allocations) under I/O. It's a perfect fit, an availability map is by definition just a bunch of data. The routines that need to get to it can just disable interrupts, patch out I/O, do a quick read/write to the memory map, patch I/O back in and restore interrupts. Originally, I tried to conserve space by making it a map of bits instead of a map of bytes. But now that we know about the memory limitations, we see that by doing so I was conserving memory in a 16 page region that is not ideal for many types of code. With a consequence of enlarging and complicating the routines necessary to access it which take up precious space in the 8K C64 OS KERNAL. And, I hadn't even gotten around to writing the memory map manipulating code that would be necessary in the loader.

After my flash of insight, I switched the memory map over from bits to bytes. In the worst case scenario, I would need 256 bytes, one for each page. The map itself would require one page to represent all the other pages.

There is one other change I wanted to make, that I was not looking forward to rewriting in code that manipulated the individual bits. Originally, pgalloc would hand out free pages starting at the bottom of memory. Aka, it would find and hand out the page with the smallest address it could find first. The problem is that it is easiest to assemble a program by starting at a known low address. Let's say starting at $0800 and consuming memory going up from there. If I then started to allocate memory from the bottom, the first page allocated would be in the middle of the space, immediately following whatever code had been loaded in. This makes it difficult to load a second program overtop of the first. Let me give you an example.

Right from the beginning I've had the goal of having C64 OS provide a standardized open and save dialog box. One that lets you navigate the file system, switch between devices, sort the directories, create new directories, rename files and directories, etc. There isn't enough room to fit this functionality into the always-resident C64 OS core code. Many programs will not need this functionality, and it's likely to be sizable code, several kilobytes minimum. The way to get this feature into C64 OS is to write the open and save dialog boxes as separate programs that can be loaded by the OS on demand. Then they do their thing, and return their result as a file reference structure (I'll discuss these in a future post,) somewhere in memory. Control then returns to the main program which can pick up the information from the file reference and use it to know from or to where to open or save a file.

If, say, the file open dialog program loads to $0800 it will overwrite the existing resident program. However, the existing resident program can be restored by reloading it from disk later. What is not so easily restored is its allocated in-memory state. If a program's allocated memory immediately follows its code, then the total memory space is "fragmented" by the program's own allocations. Standard fragmentation issues ensue. If the file open dialog program is larger than the main program that is invoking it then there is a problem. The file open dialog won't fit without overwriting part of the program's allocated state memory. I realized that it would be much easier and all sorts of clever design opportunities would open up if these two kinds of stuff in memory filled in from different directions. Program code loads low in memory, starting say at $0800. And the pages it allocates come from the top of memory, from say $AFxx down. This leaves a big empty buffer right in the middle. Many many applications, such as the ones that I have in mind, may never close that gap. Thus making it really easy to load "desk accessory" style applications, or OS utilities (like open/save dialogs, settings utilities, data sharing services, music player controls, etc.) overtop of currently open programs.

The advantages to these switches are huge and some of them unexpected. It is so incredibly easy to check for zero or non-zero bytes in the map. You simply set the .X register to index into the end of the map such that .X = #$AF represents page $AFxx. Initialize the .Y register to 0. LDA memmap,X in a loop, decrementing .X and counting the free pages with .Y. When .Y is up to the number of pages you want .X is already set to the page that represents the start of the requested block!

The code was so dramatically simplified that I saved 141 bytes in the implementation. Now, bear in mind that the memory map only needs to represent all 256 pages in the worst case scenario. In practice I'm only allocating out of 168 pages. Represented as bits, the map was 21 bytes. (168 / 8 bits = 21 bytes). Represented as bytes, the map needs to be 168 bytes big. The map in bytes is bigger, but it's bigger by only 147 bytes, and I saved 141 bytes across the implementation of pgalloc and pgfree! For a net loss of space of just 6 bytes, a negligible amount of space. But, it's much better than that. Because the 141 bytes saved is saved inside the precious memory space of C64 OS's KERNAL. And the extra 147 bytes of the map are underneath I/O where it's hard to figure out how to use that space. It's basically pure win.

I used all of the new found space in the C64 OS KERNAL to write the code for malloc and free, which are the routines for arbitrary length memory allocation from a memory pool. Where a memory pool is a set of contiguous pages previously allocated by pgalloc. Now I can actually look forward to the ease with which I'll write the memory map manipulation code for the loaders. And I will go into more detail about how I implemented malloc and free in another post.

Additionally, I was considering having a routine called meminit that would have to be called on a memory pool before it could be used with malloc. But with the saved space, and the much shorter execution time to allocate pages, I decided to roll that functionality directly into pgalloc. Now, any set of pages allocated by pgalloc is immediately ready to have malloc called on it. I even had enough free space to implement a memfree routine, that returns the count of unallocated pages.

The byte-wise memory map, needs 168 bytes to represent the 168 pages. And so it does not in fact consume an entire page of the 16 pages behind I/O. That leaves 8 bytes before the map, and 80 bytes after the map of free "special" space to be used by other features of C64 OS. Plus an additional 15 full pages. Some hints about how I may use this space appear in the notes in the memory map diagram above. However, I will also go into detail about how I plan to use the space, as well as how to use the "special" space behind the KERNAL rom in a later post.

The moral of the story here is that sometimes what seems like a good idea at first, can turn out to be not so smart after all. Saving space in variable storage at the expense of more complex code is not always worth it. In some cases, like above, it's actually much worse.

Happy 6502 programming everyone! If you have any thoughts, suggestions or corrections, feel free to leave them in the comments.

- Many things have been written about memory management and fragmentation, but I found the answer to this StackOverflow question helpful. [↩]

- As opposed to now, when I am merely "Naturally Obsessed." Cenbe on #c64-friends surely still thinks I'm off my rocker with my questions about whether it's faster to JMP() to an RTS, or to check if a JMP's pointer is null. [↩]