
Raster Interrupts and Splitscreen

Welcome back. Hopefully this post will get technical with some useful information, but not so arcane that it'll turn away more casual readers. A fine line to walk, but we'll see where we get.

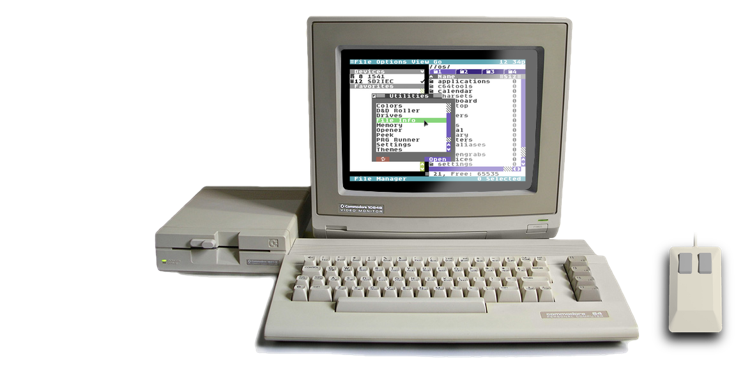

Not too long ago I tweeted out my excitement about getting the splitscreen mode in C64 OS working. On the heels of that I put splitscreen to work in the NES Tester application and shortly after in Chess. Both of these applications have more than one purpose. Besides making use of splitscreen, working out its bugs and seeing what we can pull off, the NES Tester is useful for testing my NES Controller mods (see here and here) and also for experimenting with OS drivers for 2 and 4 player game controllers. Chess, as I outlined in my recent post C64 OS Networking and Chess, displays a graphical game board, but is also a place to experiment with networking, drivers, communications protocols and so on.

I want to dig in to what I've learned about the VIC-II, raster display updating, and more, and discuss what was necessary to get splitscreen working. But along the way, I've learned so much about the history of computers that I can't pass up the opportunity to talk about some of the details. I love the cake of computers, but I find their history to be a particularly tasty icing.

What part of a computer is actually a computer?

It's totally natural to think of a computer as a device that displays images and allows you to control it using an input device like a mouse, keyboard or touch screen. This intuition is so powerful in us, it can be hard for people to imagine a computer as anything less than this modern collection of devices.

A co-worker last week spilled a full cup of sugared tea into his desktop computer's keyboard. Oops. That can't be great for your keyboard. But on the other hand, it's hard to imagine how it could damage the computer proper. The USB cable only carries 5V, and the data lines are 5V. Even if you directly shorted the +5V power line to the data line, it would interfere with communications, but it wouldn't damage the computer.

Fear that damaging the keyboard might also damage or destroy the computer reminds me of the hilariously campy scene from Star Trek: Deep Space Nine, when the station's computers go into lockdown after an old security protocol gets activated and goes awry. To stop what the security system perceives as a full-scale takeover of the station by terrorists, it announces that it will pump poisonous gas into the atmosphere. Realizing that the gas can only be distributed by the life-support system, they reluctantly conclude that destroying the station's entire life-support system is the only way to save themselves. So to carry out this audacious plan, Major Kira picks up a phaser (an energy beam weapon) and shoots one of the computer consoles!

This is funny to me, because in no world would merely shooting what amounts to the keyboard and screen of a computer magically accomplish some very specific goal, like disabling a whole system. Especially a system that is as critically important as life-support on a space station. But the fact that the writers wrote this, and that it seems plausible within the flow of the story, underlines the questionable intuitions that people have about where a computer ends and its peripheral devices begin.

I hate to be the bearer of bad news, but a computer doesn't necessarily display anything, not even text. It doesn't necessarily produce sound, much less music. And it doesn't necessarily take interactive input from the user. A computer computes. And despite modern usage, the first definition that pops up, in the first dictionary I checked, still agrees with this essential definition:

computer. n.

A device that computes, especially a programmable electronic machine that performs high-speed mathematical or logical operations or that assembles, stores, correlates, or otherwise processes information. The American Heritage Dictionary of the English Language, 5th Edition. Via Wordnik

If we start there, a computer seems to consist of, at minimum, a set of information processing logic. We think of this today as the CPU, but of course, long ago, the processor was spread out across many chips, and before that across many individual transistors or tubes. It also needs some form of memory, so that the logic processing unit has a place to retrieve instructions from and data to run the operations upon. As for high-speed, well that's a relative term. Even Eniac, the first general purpose digital computer, which was finished in 1945, despite being laughably slow by today's standards, could perform 2,400 hours of human work in just 30 seconds. Put in these terms, even Eniac is high speed.

If you could build a machine that could take the work that a person would need over a year to complete, and could do it in just 30 seconds, it would be the most epic breakthrough, a giant leap forward in the history of the ingenuity of the species. And a huge advantage to those who had the technology over those who did not. And of course it was each of those things and more. Computers have gone on to reshape the world.

Television vs Printer

But wouldn't it be convenient if you could see some real time representation of what was going on in the computer? I assume you know that computers in the earliest days used punch cards as their storage medium. It was how a programmer wrote a program and fed it into the computer. Holes in the card could be read as a digital bit value 0, that was different from the absence of a hole in the card that could be read as a digital value 1. (Or perhaps it was vice versa.) But it was a surprise to me to learn that punch cards were much more important to Eniac than just the old version of a ROM chip. The first version of Eniac actually didn't have any electronic memory. It had no input devices, no output devices and no electronic memory. It used punch cards for all three of these purposes.

Punch cards were how you put data in. Punch cards functioned as its working memory. And punch cards are how it output data back to the world, by punching the results of a computation into a fresh card. The data on the output card could either be manually interpreted by looking at it, and translating the data with a pencil and paper, or they could be translated to printed text by feeding them into another machine. That other machine pre-dated computers and therefore was not itself a computer, but could read punch cards and mechanically convert their data to more human-friendly printed output.

Meanwhile, television was already underway. Mechanically scanning a static image, transmitting the information over wires and then remotely reproducing the image on a sheet of paper had already been accomplished in the late 19th century. It turns out the mechanical version of a fax machine is a spiritual ancestor of so many of the things we love today. Who would have guessed? The question around the turn of the century was, how to make an electronic version of this early fax machine. This wasn't just an engineering problem, it was a deep physics problem. What chemicals, substances and materials can be excited in a controlled way by electricity to cause them to light up in prescribed patterns? Oh, I know! Let's try using a phosphor coated glass and shooting it with a magnetically directed electron beam emitted from a cathode ray tube! It sounds insane, right? It sounds like sci-fi mad scientist techno babble. But, of course, this is how television began. The trusty old CRT display, born from physics genius.

It wasn't all that many years, a few short decades, before the ability for electronics to produce an image on a screen got married up to a standard use of the radio spectrum, under the direction of research and standards committees which transformed the technology into broadcast television and the birth of the broadcast television industry. A technical concern of the time was how much radio bandwidth to use for a commercial broadcast channel. You have to have audio and its quality and number of channels (mono, stereo?), and you need to consider the size and resolution of a single frame of video, and you also need frames per second. And of course color was also coming down the pike soon after. Somehow they had to pack all that data in a standardized radio frequency band range, such that more than one channel of television could be broadcast simultaneously.

It reminds me a lot of the decision making that went into digital audio compact discs several decades later. If you know the storage capacity of the medium, and you have some marketable target, like 1 hour of audio on one disc, then you can work backwards and figure out what quality of audio you could use to reach the target given the constraints. Things like 16-bit and 44.1 kHz are arbitrary decisions. Why not 88.2 kHz? That'd shut up the vinyl diehards, right? The reason is that at that rate you could only fit half the play time onto the same disc. It was a lot like this for video being broadcast within a range of radio frequencies. There is only so much radio spectrum. And we didn't want to dedicate it all to a single television channel, or we'd all have been stuck watching I Love Lucy.

There were tradeoffs to be made, if you give a little here you could take a little there. So we ended up with standards like NTSC in North America, with 525 interlaced scanlines per frame and 30 frames per second. This was introduced in 1941, a full 4 years before even Eniac was available. A few years later PAL was introduced in other parts of the world with 625 interlaced scanlines per frame and 25 frames per second. [1]

The case of the vanishing pixel

For the first decade or so all broadcast television was live. But what does it really mean that TV was live? You had a real-life subject, like a news anchor or an actor, and you had an analog camera capturing the subject. The input was converted directly into the video signals, which were then boosted and transmitted as radiowaves into the air, immediately. The television set itself was similarly analog. It received the signals from the air on its antenna, and the signals were used in real time to drive the electron beam across the TV set's phosphor coated glass screen.

Eventually film was invented that could record television signals to reproduce them later. This was initially very expensive, the equivalent of thousands of dollars per hour of footage. However, even if TV was not being performed live, but was being sourced from some very expensive analog recording on film reels, it was still a live broadcast. This is fairly obvious to anyone old enough to actually remember broadcast analog television, but is completely foreign, for example, to my children who are both under 10 years old.

What do I mean by live broadcast? Television became available years before the first commercial computer, and even a few years before the first digital computer of any kind. Clearly, television was electronic but very much an analog technology. The signal being transmitted was not generated by means of computation. More importantly, the device receiving the signal was not performing any computation, and had no memory of any kind, digital or analog. The television set simply receives the radio signal, wave after wave, and that signal is converted in real time by the electronics necessary to drive the electron beam forward. You can get some idea, from the block diagram above, of how immediate this whole process was.

The beam passes along the screen in a (slightly inclined) horizontal line, sweeping from left to right and causing the phosphor to vary in its luminance at the point it's striking as it goes. When it finishes one line, it returns back to the left hand side one line lower to begin sweeping across and displaying the next line. In the NTSC standard then, it would trace out 263 lines, then a signal comes in that sends the beam back up to the top left corner of the screen, and it traces out 262 lines, offset by a half line from the first 263, and then a signal comes in to send it back up to the top left corner again to begin the next frame.

263 + 262 is 525. But, why odd and even numbers of rows? Why not the same number of rows in each field to build up one frame? The reason is quite clever. It allows the vertical return distance of the beam to be the same for both fields and yet always position the beam at the correct start location, ready for the next field. I drew this out on paper to see for myself how it would work.

In my example I used 9 scanlines total, but the point is that it's an odd number. For any odd number it can be made N for one field and N-1 for the other field. What's cool is that the vertical return distance (A) from 5 to 6 is the same as the vertical return distance (B) from 9 back to 1. They can thus cycle back and forth like this indefinitely. Very clever.

But the real trick, the most important point about how a CRT works is that the moment the beam has passed by a certain point on the screen and illuminated it, that point immediately begins to fade.

This has two consequences. The first is that, this is how you actually get a changing image. The beam cannot cause a point on the screen to become dark. It can only cause a point on the screen to light up. All points on the screen naturally decay towards being dark. And the beam lights up new points on each subsequent frame, by supplying the phosphor with a fresh dose of energy. But the second consequence is that the signal has to be live. A television set is not a computer. It has no memory. Once the beam is pushed past a certain point on the screen, not the TV set, not the broadcast radio signal, not even the original live broadcasting station has access to what that point was. It's now all in the past. What this means is that a television set cannot simply display a static image. Even though you'd think that displaying an unchanging image would be easy, or that somehow a TV ought to be able to do so passively, this is totally not the case.

Imagine comparing a still image to a single sheet of paper, and a moving image to the stack of pages in a flip book. It's obvious that you would need more and more paper to show longer and longer animations, but you would only need a single sheet to show a single image. Unfortunately, this analogy is completely wrong. A CRT display is like a flip book whose pages are always turning. To see a static image, you still need the stack of paper, but you have to draw the same image on every sheet.

For an analog CRT display, like a TV set, displaying a static image is just as active and complex as displaying a moving picture. Each new pixel of each subsequent frame of even a static image needs to be retrieved anew from the radio signal coming in over the air, just the same as a moving picture requires.

And this is the case of the vanishing pixel. The phosphor is an inert material. It can't just emit light forever. You have to charge it by smacking it with some electrons. Then it loses that energy by emitting light for a short time until it becomes de-energized and gradually goes dark again. Each pixel keeps turning itself off. The only way to keep a pixel lit up is to keep poking it with more electrons on every frame.

A digital display

Returning to printing vs television, it starts to become obvious why computers weren't connected to video display monitors for over two decades after the two co-existed. To print, a computer could generate the output data at whatever leisurely pace it required. A computer could spend hours churning away on some mathematical problem, and in the end send just a few bytes of data to the printing mechanism.

By contrast, in order to generate a video display matrix, a computer would need to digitally produce the video signal for every pixel in a frame, frame after frame, continuously, in real time, for the whole display. It doesn't seem like this would be hard, but a bit of math shows just how hard it would be. The NTSC standard draws 525 lines, 30 times a second. Or 15,750 lines per second. Imagine now that you wanted to have 100 pixels of horizontal resolution. That would be enough for somewhere between 12 and 25 characters of text per line, depending on how many pixels you devoted to a character. A very modest 2 to 4 words of English text per row. But while there are 15,750 lines per second, you would need to vary the output signal 100 times per line to get 100 pixels of horizontal resolution. Which means you would need to vary the signal at a rate of 1,575,000 times per second, at least in short bursts.

Given that the smallest unit of processing precision is the clock cycle, to accomplish 100 pixels of horizontal resolution, at an absolute bare minimum, you would need a clock rate of ~1.5 MHz. Meanwhile, Eniac was clocked at… are you ready for it? 100 kHz. A hundred thousand cycles per second is wicked fast when compared to the rate of a human being performing math and logic operations with a pencil and paper. But it's 15 times slower than the bare minimum necessary to push a resolution so low that it is practically useless.
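The arithmetic in the last two paragraphs can be checked with a few lines of Python. This is only a back-of-envelope sketch using the figures quoted above; the variable names are mine and purely illustrative.

```python
# Back-of-envelope figures taken from the text; names are illustrative only.
LINES_PER_FRAME = 525       # NTSC scanlines per interlaced frame
FRAMES_PER_SECOND = 30      # NTSC frame rate
PIXELS_PER_LINE = 100       # the modest horizontal resolution imagined above
ENIAC_CLOCK_HZ = 100_000    # 100 kHz, as quoted in the text

lines_per_second = LINES_PER_FRAME * FRAMES_PER_SECOND
pixel_rate_hz = lines_per_second * PIXELS_PER_LINE
shortfall = pixel_rate_hz / ENIAC_CLOCK_HZ

print(f"{lines_per_second:,} lines per second")           # 15,750
print(f"{pixel_rate_hz:,} signal changes per second")     # 1,575,000
print(f"{shortfall:.2f}x Eniac's clock rate")             # 15.75x
```

The "15 times slower" figure is this last ratio, rounded down.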

That's not the only limiting factor to a video matrix display, though. To generate a stable computer image, remember, the same output signals need to be produced over and over for every frame. To digitally generate the display signal, unless you're just algorithmically producing a repeating pattern, the data have to be retrieved from memory. For a 100 by 100 pixel matrix, you'd need access to at least 10,000 bits or 1.22 KB of data. The earliest computers didn't have any electronic memory, and when memory was invented it was exorbitantly expensive. And what we're not even considering here is that if the CPU is used to generate the video signal then every cycle it spends producing that signal is a cycle not spent doing productive work. You'd need a machine 15X faster than Eniac just to hold an output video display stable, and it would have no time to do any actual work.
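Here is a small sketch of where the 1.22 KB figure comes from, assuming one bit per pixel and 1 KB = 1024 bytes. The function name is hypothetical.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int = 1) -> int:
    """Bytes needed to hold one frame, rounded up to whole bytes."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8

size = framebuffer_bytes(100, 100)
print(size, "bytes")                  # 1250 bytes
print(round(size / 1024, 2), "KB")    # 1.22 KB
```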

Over the next decade or two, into the 50s and 60s, computers got faster and more institutions, such as research universities, started to have them. They gained electronic memory. It was very expensive, but it was memory. Meanwhile, teleprinting machines had existed long before computers. A teleprinting machine was an electromechanical device with a keyboard that let the user type a message, which was coded and sent electronically to another machine. The other machine would decode and print the message. These teletype machines were refitted to exchange data between the computer and the human operator.

Ken Thompson (sitting) and Dennis Ritchie on a PDP-11, probably programming Unix. There is no screen! He's looking at a roll of printer paper.

It wasn't until the very end of the 1960s that the first video terminal was introduced, and it took several more years for them to push the older teletype-style machines out of the industry. The video terminal was conceived as a teletype machine whose output was to a video screen rather than to a printer. The genius of this move is that the computer itself was not directly involved in the production of the video signals. In fact, from the perspective of the computer nothing had really changed. It could send bytes of data out to a TTY-device, and whether that device printed the output to a roll of paper, or whether that device maintained its own memory and generated a video display representation of the data, made little difference to the computer proper.

A general purpose computing processor, while it could in theory be used to produce a digital video signal, wasn't put to that purpose as it would have been a great waste of a rare and expensive commodity.

The computer—the logic processor, its memory and storage—offloaded video display capabilities to dedicated hardware video terminals. The early terminals themselves were not computers, but contained logic in hardwired circuitry that was capable of displaying a stable video matrix. The computer would then send the individual bytes of characters, in some standard code like ASCII, to be displayed. The terminal contained just enough memory to store the characters to display, and together with a character set ROM could continuously generate the video signals needed to hold the image on the CRT display, frame after frame after frame.

The birth of the video controller

While doing my reading on this history, I was surprised by how late video displays actually came to computers. Video terminals only became truly popular by the mid 1970s. But, the Apple II and the Commodore PET home computers were available in 1977. There is scarcely five years or so between when video displays became available on the most expensive computers on earth, and when home computers, affordable by individual people, were able to be connected to a television for output, or in the case of the PET had a built-in display.

A Commodore PET, 1977, with built-in video display.

The home computer revolution was basically enabled by the development of Very Large-Scale Integration in the late 1970s. This superseded Large-Scale Integration. Instead of tens to hundreds of gates integrated onto a single chip, it became possible to put thousands of gates on a single chip, or the equivalent increase in transistors. Instead of a processor being distributed across thousands of individual small-scale integration logic chips, simple but functional processors could suddenly be implemented on just a single chip. This dramatically lowered the cost of computation.

But, the same thing also happened, more or less, with video controllers. You simply take all of that so-called non-computational hardwired logic built into a video teletype terminal, and you Very Large-Scale Integrate all of its components into a single chip. A dedicated video controller chip. And once you have a CPU on a single chip and a video controller on a single chip, all you need to do is put both chips on a single board with some RAM, some ROM and some glue logic, and bingo-bango, you have an affordable small home computer.

A CPU like the 6502 is pretty slow, clocked around 1 MHz. That, as we saw with some simple math earlier, is not fast enough to generate a real time video signal of any appreciable resolution. But what's worse is that if it were responsible for producing the video signal it would rob the machine of all its computation time. You'd need a CPU that was much more powerful to be able to generate both a video signal and run the program at the same time. This was actually the same problem that big computers had. But they solved it by offloading the video signal production to custom hardware, and that's exactly what a home computer's video chip does.

Here's an interesting intermediate technology. The Atari 2600.

An Atari 2600, also from 1977. (Image compliments of Atari.io)

Even when you have a video chip, that chip needs to have a source of data from which to continually produce the next frame of video. Which is totally unlike the live streaming nature of an analog television broadcast, where each new frame is received afresh from the airwaves. But in those days RAM was still very expensive. An Atari 2600 came with only 128 bytes of RAM. An eighth of a kilobyte. Atari 2600 programs run primarily from between 4 and 32K of ROM, but ROM doesn't change, so it can't be used directly as the source for the video display.

Instead this machine uses a kind of hybrid model. It has a video chip (which is also responsible for generating sound) called the TIA, for Television Interface Adaptor. The chip has registers that control the positions and colors of a few objects and half of a background. But here's the crazy thing, the TIA doesn't read data directly from memory. Instead, its registers hold enough information to draw a single scan line of the total output. During the time when the raster beam finishes at the right side of the display area and returns to the left side of the next line, the CPU has to change the registers of the TIA to prepare it for the next scan line.

Although this technique makes programming the Atari 2600 very complicated, it also allows for a resolution of 40 by 192. If you used just one bit for each of those pixels, you would still need at least 960 bytes to represent them all. This is an example then of a machine that uses a hybrid model, where the CPU is intimately involved in generating the complete video output, but not in the production of every individual pixel. The CPU is free to run the program logic while the TIA chip draws one scan line. Then the CPU becomes occupied modifying the video chip's registers, and then it gets another scan line of free time to run the program again. It carries on like this to draw one complete frame. The CPU is then also responsible for telling the TIA when to generate the vertical sync signal to start the next frame.
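To make the hybrid model concrete, here is a toy simulation in Python. It is a sketch, not a model of the real TIA: the class, its single 40-bit "register" and the diagonal pattern are all hypothetical, and only the 40 by 192 resolution comes from the text. The point is that the chip only ever holds one line's worth of state, and the program rewrites that state between lines.

```python
from dataclasses import dataclass

WIDTH, HEIGHT = 40, 192  # resolution quoted in the text

@dataclass
class ToyLineChip:
    """Hypothetical chip holding just enough state to draw ONE scan line."""
    line_bits: int = 0  # 40 bits, one per pixel of the current line

    def draw_line(self) -> str:
        bits = format(self.line_bits, f"0{WIDTH}b")
        return bits.replace("0", ".").replace("1", "#")

def pattern_for(line: int) -> int:
    """The 'program': computes the next line instead of storing a bitmap."""
    return 1 << (line % WIDTH)  # a repeating diagonal stripe

def render_frame() -> list[str]:
    chip = ToyLineChip()
    frame = []
    for line in range(HEIGHT):
        chip.line_bits = pattern_for(line)  # CPU's job between scan lines
        frame.append(chip.draw_line())      # chip's job during the sweep
    return frame

frame = render_frame()
print(len(frame), "lines drawn from", WIDTH // 8, "bytes of line state")
print(WIDTH * HEIGHT // 8, "bytes a full 1-bit frame buffer would need")
```

The last line reproduces the 960-byte figure above, which is far more than the 128 bytes of RAM the machine actually has.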

What is the VIC-II and what does it do?

Finally we come to the VIC-II. This was clearly not MOS Technology's first video controller, but I think we have a good enough idea about what they do that we can skip to the video controller found in the C64 (and later in the C128).

A later model PAL VIC-II chip by Commodore Semiconductor Group.

As I'm sure you can guess by now, the VIC-II is responsible for generating the video output signal. It is a lot more sophisticated than the Atari 2600's TIA however. The VIC-II can produce an image with a resolution of 320 by 200 pixels without any support from the CPU. This is a huge boon to programmers. The CPU can chug along executing the user's program, and the VIC-II runs along at the same time and keeps the display perfectly stable, all by itself.

So how exactly does the VIC-II accomplish this?

The first thing to wonder is, if the C64 uses a 6502 (actually the 6510, a subtle variant) that is running at just 1 MHz, then how is it able to generate such a high resolution video output? 320 horizontal pixels maybe doesn't sound like much, but if we remember our NTSC specifications, 525 lines, times 30 frames a second is 15,750 lines per second, times 320 dots per line is over 5 million signal variations per second. The VIC-II also generates output in color and has to draw in the border too, so it must actually be faster than 5 MHz.

The answer to this mystery is that while the 6510 runs at 1 MHz, the actual physical clocking crystal in a C64 is 14.31818 MHz on an NTSC machine, or 17.734472 MHz on a PAL machine. This speed is divided by 14 on an NTSC machine to result in a frequency of just over 1 MHz, and is divided by 18 on a PAL machine for a frequency of just under 1 MHz. How exactly the crystal's frequency is divided and how the VIC-II and 6510 are synchronized is a bit complicated and I'm still trying to work out all the details. But I think I'll write a technical deep dive on that fun little part of the C64 when I get it sorted out.

For now, it is enough to say that the VIC-II receives two clock signals, the color clock, which comes straight from the crystal frequency, plus a "dot clock." The dot clock is, just as it sounds, the rate at which individual dots (or pixels) are output. The dot clock is 8.1818 MHz. The schematic I have doesn't say, but I believe that is the rate specifically for NTSC. Here's how the math works out: (Again, this is NTSC.)

14.31818 MHz (crystal frequency) ÷ 14 (divider logic) = 1.022727 MHz (6510 clock frequency)

8.1818 MHz (VIC-II dot clock) ÷ 1.022727 MHz (6510 clock frequency) = 8 (dots per 6510 clock cycle)

Based upon the above math, we see that the VIC-II draws exactly 8 pixels [2] (horizontally, from left to right, because that's how the raster beam scans), in the duration of 1 CPU clock cycle. It is not a coincidence that the ratio of 8 pixels to 1 clock cycle is the same as the ratio of 8 bits in 1 byte. We will return to this shortly.
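The clock figures can be reproduced in a few lines of Python. This sketch only uses numbers quoted in this post, and treats the 8.1818 MHz dot clock as the NTSC value, which is the assumption made above.

```python
# All frequencies are the ones quoted in the text, in Hz.
NTSC_CRYSTAL_HZ = 14_318_180
PAL_CRYSTAL_HZ = 17_734_472
NTSC_DOT_CLOCK_HZ = 8_181_800   # assumed to be the NTSC figure

ntsc_cpu_hz = NTSC_CRYSTAL_HZ / 14    # divider for NTSC
pal_cpu_hz = PAL_CRYSTAL_HZ / 18      # divider for PAL
dots_per_cycle = NTSC_DOT_CLOCK_HZ / ntsc_cpu_hz

# Minimum dot rate estimated earlier: 525 lines x 30 frames x 320 dots.
min_dot_rate_hz = 525 * 30 * 320

print(f"NTSC 6510 clock: {ntsc_cpu_hz / 1e6:.6f} MHz")   # just over 1 MHz
print(f"PAL 6510 clock:  {pal_cpu_hz / 1e6:.6f} MHz")    # just under 1 MHz
print(f"dots per CPU cycle: {dots_per_cycle:.3f}")
print(f"dot clock exceeds minimum: {NTSC_DOT_CLOCK_HZ > min_dot_rate_hz}")
```

The ratio comes out at 8 to within the rounding of the quoted frequencies.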

How does the VIC-II build an image?

We talked about how the analog radio signal comes in from the airwaves and drives the raster scanning beam of a television set. But if a device like the VIC-II is digitally generating the video signal, it has to know what pixel to produce in real time as each pixel is being drawn. The data is in RAM somewhere, but somehow the VIC-II has to collate the various pieces of information from the right places at the right time for each pixel. First, what are those pieces, and second how does it find them?

For the sake of simplicity let's just stay focused on the VIC-II's text mode. A character is an 8x8 pixel square, and 40 characters are placed side by side in every text row. But as the raster beam travels across the screen one scan line at a time, it draws the first pixel row of all 40 characters before moving down a scan line, and then it sweeps across and draws the second pixel row across all 40 characters, then the third pixel row across all 40 characters, and so on.

One pixel row of one character is 8 pixels, or 8 bits, or 1 byte. But the actual pattern of bits for a given character is found in the character set. So, the parts that need to be collated at any given time are: the character being drawn, the character set bitmap, and the background and foreground colors.
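As a rough illustration of that collation, here is a toy scan line renderer in Python. The two-glyph character set, the `screen` grid and the function name are all made up for the example, and colors are reduced to "#" for foreground and "." for background. Only the 8x8 character cell, the 40 columns and the line-by-line drawing order come from the text.

```python
COLUMNS = 40
CELL = 8  # a character is 8x8 pixels

# Hypothetical character set: 8 bytes per glyph, one byte per pixel row.
CHARSET = {
    0: [0x00] * 8,                                        # blank
    1: [0x18, 0x3C, 0x66, 0x7E, 0x66, 0x66, 0x66, 0x00],  # an 'A'-like shape
}

def render_scanline(screen: list[list[int]], line: int) -> str:
    """Build one scan line (0-based, within the text area) as 320 pixels."""
    text_row, pixel_row = divmod(line, CELL)
    out = []
    for col in range(COLUMNS):
        code = screen[text_row][col]        # which character sits here
        bits = CHARSET[code][pixel_row]     # that glyph's byte for this row
        out.append(format(bits, "08b"))     # 8 bits become 8 pixels
    return "".join(out).replace("0", ".").replace("1", "#")

# A 40x25 screen of blanks with one glyph in the top left corner.
screen = [[0] * COLUMNS for _ in range(25)]
screen[0][0] = 1

for line in range(CELL):
    print(render_scanline(screen, line)[:16])
```

Notice that the glyph is never drawn in one go. Each call produces one pixel row of all 40 characters, which is the order the raster beam imposes.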

In order to know where to fetch this data though, it has to know a few other things. The most important thing to know is what raster line it is on. If you think back to the TIA chip in the Atari 2600, it doesn't need to know what raster line it's on. All it does is draw the current raster line, turning certain colors on and off based on the positions and colors of its built-in objects, which are all stored in its registers. For the Atari 2600, it is the job of the CPU to keep track of what raster line is being output, and how to change the TIA's registers on each successive raster line to build up the full frame. But the VIC-II can draw an image completely independently of the CPU. So the VIC-II needs to know what raster line it's on, so it can know where in memory to fetch data from.

The C64 Programmer's Reference Guide has this table in Appendix N, pages 454 and 455. It's a register map of all the VIC-II's registers. When I first saw this, I thought to myself, "What the hell am I looking at?" Do not despair, it's not as complicated as it first appears. The VIC-II has 47 registers, and they are listed in numeric order down the left hand column. (In decimal and in hexadecimal). The rightmost column is a textual description of the register. It's all the middle stuff that's confusing.

Most of the registers are 8-bits wide, so there are 8 columns for data bits 0 through 7. Each bit of every register is assigned a unique label. Most of the labels are based on the description of the register plus a number. But there are some exceptions for registers where each bit has a special purpose. Having a label for each bit is useful because the VIC-II combines bits from multiple places to build up the address whence it will retrieve data.

Table of VIC-II registers, from Appendix N of C64 Programmer's Reference Guide

Let's ignore most of these for now, and notice that register 18 is called the raster register. The raster register holds a number that the VIC-II continually ticks up as it draws out scan line after scan line. Although the C64's vertical resolution is only 200 pixels, there are also borders at the top and bottom. Demo programmers use tricks to open these borders up, and sprites can then be drawn into that area. So we know the VIC-II is actually tracking and drawing more raster lines than just 200.

This is a fuzzy area for me, because I haven't been able to track down a solid source of information for NTSC machines. According to the article, Screen dimensions from a raster beam perspective, at dustlayer.com, an NTSC machine draws 262 lines and a PAL machine draws 312. These numbers come from halving the number of interlaced lines in the NTSC and PAL standards. The standard NTSC frame is composed of two interlaced fields, totalling to 525 lines. However, video adapter chips on home computers usually generate a simpler output. The NTSC VIC-II generates 60 non-interlaced frames per second. If you imagine it from the perspective of the TV set, it's as though both fields are drawing the same data. Alternatively, you could say the frame rate is twice as high, but each frame has only half as many lines. So NTSC's 525 lines at 30 frames per second become 262 lines at 60 frames per second. And PAL's 625 lines at 25 frames per second become 312 lines at 50 frames per second. This is what the dustlayer.com article indicates, but then it only goes into the details on the dimensions of a PAL screen.
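The halving described above is simple enough to write down. This sketch mirrors the reasoning in this paragraph only; it is not a statement about the exact line counts a real VIC-II produces, and the function name is hypothetical.

```python
def non_interlaced_equivalent(interlaced_lines: int, frames_per_second: int) -> tuple[int, int]:
    """Treat each interlaced field as its own frame: half the lines, twice the rate."""
    return interlaced_lines // 2, frames_per_second * 2

print(non_interlaced_equivalent(525, 30))   # NTSC: (262, 60)
print(non_interlaced_equivalent(625, 25))   # PAL:  (312, 50)
```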

The part that becomes fuzzy to me is that on a PAL machine (at least) the VIC-II draws 49 lines in the top border, before getting to the first line of the bitmapped area. Then it draws 200 lines of that bitmapped area, and following that 63 lines are drawn as the bottom border. The C64 Programmer's Reference Guide that I have goes into detail on the NTSC VIC-II. It too only starts the visible bitmap area at raster line 50, and draws 200 lines of bitmapped area. I have confirmed this on my own NTSC C64s. For example, setting a sprite's top position to raster line 50 causes it to appear flush with the top border. What doesn't make sense to me is how many lines the bottom border gets if it starts at raster line 250. On a PAL machine this leaves a comfortable 63 raster lines to draw the bottom border, but on an NTSC machine, if there are only 262 raster lines total, and the bottom border starts at 250, this puts 49 in the top border but only 13 lines in the bottom border. This doesn't feel like enough, or, it seems like it should cause the visible area on an NTSC machine to sit closer to the bottom of the screen than to the top. The Programmer's Reference Guide doesn't go into detail on exactly how many raster lines each machine is actually drawing. If someone knows and wants to clear up this mystery for me, please let us know in the comments and I'll update this post.

Whatever the answer to the above mystery, I don't know it. Perhaps NTSC machines only start outputting real raster lines when the VIC-II's raster counter is greater than zero, or perhaps the C64 violates the NTSC standard and is somehow able to output more than 262 lines (and maybe dustlayer.com is just mistaken on this point). But one thing is certain: the number of raster lines drawn is greater than the range of a single 8-bit register. To handle this the VIC-II uses bit 7 of register 17 as a 9th bit for tracking the current raster line. There are thus 9 raster register bits, labeled RC0 to RC8. The important point is that the raster register continually counts up until it reaches the last line and is then rolled back to zero.
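To make the 9-bit raster value concrete, here is a minimal sketch in Python of how the two register values combine. The function name and the sample register values are assumptions chosen for illustration. On a real machine the two bytes would be read from VIC-II registers 17 and 18.

```python
def raster_line(reg17: int, reg18: int) -> int:
    """Combine the 9 raster bits into a single line number.

    reg17 and reg18 stand for the bytes read from VIC-II registers 17 and 18.
    Bit 7 of register 17 is the 9th (most significant) raster bit.
    """
    high_bit = (reg17 >> 7) & 1
    return (high_bit << 8) | (reg18 & 0xFF)


# Illustrative values only.
print(raster_line(0x1B, 50))  # 50
print(raster_line(0x9B, 5))   # 261
```

The second call shows why the extra bit matters: with the high bit set, a low byte of 5 means line 261, not line 5.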

How does the VIC-II fetch data?

While the VIC-II is drawing the top and bottom borders it's pretty straightforward. The "pixels," so to speak, are all on and the color comes from the value stored in register 32, the "exterior color" register. The border, thus, is drawn much like how the TIA in the Atari 2600 draws everything. No main memory has to be accessed because the data is already present in the VIC-II's own registers.3 The VIC-II knows that it's in the top or bottom border by means of the raster register.

Now what happens when it hits the first line of the visible area, the bitmapped area? This part is really cool. The VIC-II typically fetches 1 byte from memory per CPU clock cycle. Remember that the dot clock is exactly 8 times faster than the CPU clock. And that a byte contains 8 bits, which is enough information for the VIC-II to draw 8 pixels, one pixel per dot clock cycle. Recall how I said that an analog TV broadcast signal is live? Well, you can see here just how live the VIC-II's signal production is. It is an incredibly tightly timed operation. The VIC-II reads 1 byte from memory and outputs that one byte's bits immediately at 8 MHz, just in time for the next CPU clock cycle allowing it to fetch the next byte, which it immediately draws out and so on. It does this super tight read-render-read-render-read-render in a 40-byte burst, for the 40 columns across the screen. Then it's into the right border, then it wraps to the left border, and then it starts the 40-byte tight cycle for the bitmapped segment of the next raster line.

So where does it actually get that byte from? For this we need the register labels.

Above we have two of the address types that the VIC-II generates. We'll focus on the top one first. Character data address.

Note that the VIC-II only contributes 14-bits to the main memory address bus, A0 to A13. It controls the least significant 14 bits, and the most significant two bits come from CIA 2. Thus a register in CIA 2 determines which of the C64's four 16K banks the VIC-II sees. The VIC-II generates the low 14 bits according to the labels shown in the character data address above. Let's see how the bit usage breaks down.

A character set (either in ROM or in RAM) is a bitmap that's 2K big. Each character is 8x8 pixels, or 8 bytes with 8 pixels each. And 256 characters times 8 bytes per character is 2048 bytes or 2K.

To address a full 2 kilobytes you need 11 bits. That is: 2 ^ 11 = 2048. The 2K character set can be found at any of 8 different offsets within the 16K bank, because 16 / 2 = 8. To specify 8 possible offsets you need 3 bits, 2 ^ 3 = 8. So in the VIC-II's 14-bit address, the 3 most significant bits are called the character base, because they specify the base offset for where the character set is found. These bits are labeled CB11, CB12, and CB13, and come from the lower nybble of register 24. The remaining 11 bits then are used as the offset into the character set.

A character set bitmap is laid out just one character wide. The first 8 bytes are the eight rows of the first character. The next 8 bytes are the eight rows of the second character and so on. Thus to find the offset into the character set we need to know which character is being rendered. Each character's bitmap starts at (CharacterBase + Screencode * 8). This is interesting. The screencode that's in screen matrix memory is used directly as an index into the character set bitmap. This feels like another example of 8-bit efficiency, there is no abstraction layer at all. Address bits 3 through 10 are the 8 bits of the character's screencode. The screencode's 8 bits are shifted left 3 times. In all place-based number systems a left shift multiplies the number by the base. In decimal, a left shift multiplies by 10. In binary, a left shift multiplies by 2. Three left shifts, therefore, is x2x2x2 or times 8.

The byte value in screen memory is used by the hardware of the video chip as an index into memory. Very cool.

The first four characters in the byte order of a character set bitmap. (See: Commodore 64 Screen Codes)

Lastly, it needs to know what raster line is currently being drawn, and uses that as a further offset into the character set bitmap, to find the specific bit row of the character glyph. So, the last 3 address bits are taken from the low 3 bits of the raster register. 2 ^ 3 = 8, for the 8 bytes of the character bitmap. This is really cool, each time the VIC-II needs to read the next byte to render, it does so by composing an address from multiple sources.

Let's look at those bits and their sources again:

| Address Bit | Source | Source Bit | Label |
|---|---|---|---|
| 15 | CIA 2 Port A | bit 1 | — |
| 14 | CIA 2 Port A | bit 0 | — |
| 13 | Character base 13, VIC-II register 24 | bit 3 | CB13 |
| 12 | Character base 12, VIC-II register 24 | bit 2 | CB12 |
| 11 | Character base 11, VIC-II register 24 | bit 1 | CB11 |
| 10 | Current screencode | bit 7 | D7 |
| 9 | Current screencode | bit 6 | D6 |
| 8 | Current screencode | bit 5 | D5 |
| 7 | Current screencode | bit 4 | D4 |
| 6 | Current screencode | bit 3 | D3 |
| 5 | Current screencode | bit 2 | D2 |
| 4 | Current screencode | bit 1 | D1 |
| 3 | Current screencode | bit 0 | D0 |
| 2 | Raster Counter, VIC-II register 18 | bit 2 | RC2 |
| 1 | Raster Counter, VIC-II register 18 | bit 1 | RC1 |
| 0 | Raster Counter, VIC-II register 18 | bit 0 | RC0 |

This address is composed in real time, changed once per CPU clock cycle to get the next byte to display. But you'll notice that it isn't simply incrementing. The least significant bits are only incrementing when the raster line changes, but that only happens after it's read in 40 different bytes to draw the 40 columns across that raster line. Rather, the values that are changing on every cycle are the 8-bit screencode.
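The composition in the table can be sketched in a few lines of Python. This is only an illustration of the bit layout described above, not a model of the chip. The function name is made up, the bank is passed in as a plain number from 0 to 3 (how CIA 2's port bits select it is left out), and `rc` stands for the three low raster count bits.

```python
def char_data_address(bank: int, reg24: int, screencode: int, rc: int) -> int:
    """Compose a 16-bit character data address from its separate sources.

    bank       -- which 16K bank the VIC-II sees (0..3), assumed already resolved
    reg24      -- byte held in VIC-II register 24
    screencode -- the 8-bit screencode being rendered
    rc         -- low 3 raster count bits, i.e. the glyph row (0..7)
    """
    cb = (reg24 >> 1) & 0b111                 # CB13..CB11 live in bits 3..1
    low14 = (cb << 11) | ((screencode & 0xFF) << 3) | (rc & 0b111)
    return ((bank & 0b11) << 14) | low14


# Register 24 = 21 ($15), screencode 1, first row of the glyph.
print(hex(char_data_address(0, 21, 1, 0)))  # 0x1008
# Same character, last row of the glyph.
print(hex(char_data_address(0, 21, 1, 7)))  # 0x100f
```

Note how the screencode lands in bits 3 through 10 simply by being shifted left three times, which is the multiply-by-8 described earlier.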

This brings us to the next VIC-II generated address. The character pointer address.

As we have just seen above, the VIC-II uses the current screencode as a component of the address it uses to lookup the bit pattern from the character set bitmap. Clearly, it needs to also be able to read the screencode from screen matrix memory. First we'll look at that, and then see where it leads us.

The same CIA 2 bits provide the VIC-II's bank for everything the VIC-II does. So whatever 16K bank you're using, that's the same bank whence it will read both the character set bitmap and screen matrix memory. Screen matrix memory is a bit different though. It's 1000 sequential bytes. The default location for screen memory is from 1024 to 2023 ($0400 to $07E7), the first thousand bytes of the 1K block from 1024 to 2047. How does the VIC-II find it starting at 1024? For that, we look to how the VIC-II composes the character pointer address.

Earlier image, repeated here for easy reference.

After CIA 2's bank bits, the next most significant 4 bits are also from the VIC-II register 24. These are four video matrix bits, VM10, VM11, VM12 and VM13. 2 ^ 4 = 16, allowing the 1K block of screen matrix memory to be found at any of 16 possible 1K offsets into the 16K bank. Makes sense. Screen matrix memory is half the size of a character set, so there are twice as many possible places in the 16K bank where it can sit. Technically, you can set the upper 4 bits of register 24 to the same as the lower 4 bits, and the VIC-II will use half of the character set bitmap data as though they were screencodes in screen matrix memory! I don't know why you would want to do that, but it's as easy as:

POKE 53272,69 ... for the first half of the character set, or POKE 53272,85 ... for the second half

The reason you see vertical sequences of "@" every 8th column for the first so many rows is because most of the characters in the early part of the set have a blank last row. The blank is 0, all bits off. And 0 is the screencode for "@".
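If you want to check what those POKE values select, the two nybbles of register 24 can be decoded by hand or with a short Python sketch like this one. The function name is hypothetical, and the value 21 is included on the assumption that it is the usual power-on setting of the register.

```python
def decode_reg24(value: int) -> tuple[int, int]:
    """Return (screen matrix offset, character set offset) within the 16K bank."""
    vm = (value >> 4) & 0x0F   # VM13..VM10, upper nybble, 1K steps
    cb = (value >> 1) & 0x07   # CB13..CB11, bits 3..1, 2K steps
    return vm * 1024, cb * 2048


for value in (21, 69, 85):
    screen, charset = decode_reg24(value)
    print(value, screen, charset)
# 21 1024 4096
# 69 4096 4096
# 85 5120 4096
```

With 69 the screen matrix starts at the same offset as the character set, and with 85 it starts 1K later, which is the second half of the 2K set.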

The least significant 10 bits are used as an index into screen matrix memory. You need at least 10 bits to address 1000 bytes. 2 ^ 9 = 512, that's not enough. 2 ^ 10 = 1024, that's just a hair more than enough, so it needs 10 bits. These register bits are labeled VC0 to VC9, but you'll notice that they are not found in the table of the VIC-II's registers, above. That's because VC (which I suppose stands for Video Count) is an entirely internal register. The CPU cannot read it by addressing some register of the VIC-II. But we can imagine how it works. It increments once to address each subsequent screencode.
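Here is a rough Python sketch of the character pointer address, under the same assumptions as before: the bank is passed in as a plain number, and the helper names are invented for illustration. Since VC is internal to the chip, the sketch just computes what its value would be for a given text row and column.

```python
COLUMNS = 40


def vc_for_cell(row: int, col: int) -> int:
    """Index of a screen cell in screen matrix memory (0..999)."""
    return row * COLUMNS + col


def char_pointer_address(bank: int, reg24: int, vc: int) -> int:
    """Compose the address of a screencode from the bank, VM bits and VC."""
    vm = (reg24 >> 4) & 0x0F            # VM13..VM10 from the upper nybble
    return ((bank & 0b11) << 14) | (vm << 10) | (vc & 0x3FF)


print(char_pointer_address(0, 21, vc_for_cell(0, 0)))    # 1024
print(char_pointer_address(0, 21, vc_for_cell(24, 39)))  # 2023
```

The two results match the default screen memory range of 1024 to 2023 mentioned above.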

We have a problem though. If you're familiar with programming the C64 you already know what the problem is, and if you're an astute reader you may have already figured out what it is.

The dot clock is exactly 8 times faster than the CPU clock. Memory can only be accessed at twice the rate of the CPU clock. Typically the CPU and the VIC-II share memory accesses. The VIC-II reads memory during the first (low) phase of the CPU's clock cycle, and the CPU reads memory during the second (high) phase. In a given CPU cycle, the VIC-II reads one byte, the character data byte from the character set bitmap. Then it draws it. Repeat. But it also needs to know what the screencode from screen matrix memory is, because it uses it in the address to get the character data. But, the read-render-read-render cycle is so tight, there literally isn't any time to also fetch the screencode. So, how does it do this?

The side borders

In an analog TV signal, the number of raster lines is discrete, there are 525 in NTSC (interlaced), or 625 in PAL (interlaced.) But, the horizontal resolution is purely analog. It is not discretely divided into pixels. As the analog video camera sucks in light it is directly transmuted into the analog video signal. And when it hits your analog TV set it continuously varies the electron beam as it sweeps along the raster line. In other words, on a CRT display, vertical resolution is digital, but horizontal resolution is analog.

The computer of course, being a digital computational machine, introduces a discretization to the horizontal sweep. The VIC-II divides the horizontal sweep into 504 pixels. Although not all of these pixels actually get drawn to the screen, because this also includes the length of time it takes for the scanning beam to leave the right edge of the screen and return to the left edge of the screen. This period is called horizontal blank, because the electron beam has to be momentarily turned off so it doesn't blast a jet of electrons across the phosphor screen as it is realigned from the right edge back over to the left edge.

Nonetheless, if we divide the 504 horizontal pixels by 8, (because the dot clock is 8 times faster than the CPU,) we get 504 / 8 = 63. This means that exactly 63 CPU clock cycles occur while the VIC-II draws one scan line. But, the bitmapped area of the display is only 320 pixels wide. 504 - 320 = 184. So 184 pixels per scan line are actually being drawn split between the left and right borders. 184 / 8 = 23. So 23 CPU cycles are spent in the borders. But, just as we discussed for the top and bottom borders, the VIC-II doesn't need to read out of main memory to draw a border. The data is already there, in its "exterior color," register 32. So, what this means is that it could use border time to read other data it needs.

And it does do this. Unfortunately, the border, thick and convenient as it is, is still not enough time to read in all the character pointers, (the screencodes from matrix memory,) that are necessary for rendering the upcoming scanlines. Why not? Even if it used the full amount of time in both the right and left borders, that would only be 23 cycles. Only 23 bytes could be read but it needs at least 40 screencodes. In reality though it needs even more time because it also may need to read in sprites and sprite pointers on every raster line. I am not going to go into sprites though, as they add complication and are outside the scope of this post.
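The arithmetic of the last two paragraphs fits in a few lines of Python. The constant names are mine, and the numbers are the ones used in this post.

```python
DOTS_PER_LINE = 504    # horizontal pixels per scan line, including blanking
DOTS_PER_CYCLE = 8     # the dot clock is 8 times faster than the CPU clock
BITMAP_DOTS = 320      # width of the bitmapped area
COLUMNS = 40           # screencodes needed per text row

cycles_per_line = DOTS_PER_LINE // DOTS_PER_CYCLE
border_cycles = (DOTS_PER_LINE - BITMAP_DOTS) // DOTS_PER_CYCLE
shortfall = COLUMNS - border_cycles

print(cycles_per_line)  # 63
print(border_cycles)    # 23
print(shortfall)        # 17
```

Even in the best case, then, the border time is 17 fetches short of one row of screencodes.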

The question is then, where does it find the time to fetch all the screencodes? The answer is what are infamously known as the bad lines. A wire connects the AEC (Address Enable Control) pin on the VIC-II chip to a corresponding AEC pin on the CPU, and the VIC-II uses it to tell the CPU to remove itself from the address bus. This is asserted every cycle, allowing the VIC-II and CPU to take turns accessing the buses. There is an additional wire connecting the BA (Bus Available? Bus Access?) pin on the VIC-II to the RDY (Ready) pin on the CPU that tells the CPU to halt processing. After asserting this halt signal, the VIC-II waits 3 CPU clock cycles, during which the two continue taking turns, to give the CPU time to finish any operation it may be in the midst of. Then, with the CPU halted and safely off the bus, the VIC-II can use both clock phases for itself and can make 2 memory accesses per cycle. It halts the CPU for at least 40 cycles.

Remember, when the VIC-II is drawing scan lines it combines the screencode with the least significant 3 raster count bits. For one set of raster line bits it cycles through 40 screencodes. But when the raster count increments by one, it draws the second raster row of the same set of 40 screencodes. Thus, it is able to draw 8 scan lines before it needs to fetch a new set of 40 screencodes. So the CPU halting only happens on every 8th scan line of the bitmapped area of the screen. These lines are the so-called bad lines.
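As a sketch of that pattern, the following Python maps a raster line to its text row and to the line within that row. It follows this post's numbering, where the bitmapped area starts at raster line 50, and it ignores vertical scroll settings entirely, so treat it as an illustration and not as a statement about the hardware. The names are hypothetical.

```python
FIRST_BITMAP_LINE = 50   # per this post's numbering
BITMAP_LINES = 200
LINES_PER_ROW = 8


def locate(raster: int):
    """Return (text row, line within row, needs new screencodes) or None.

    None means the raster line is in the top or bottom border.
    """
    offset = raster - FIRST_BITMAP_LINE
    if not 0 <= offset < BITMAP_LINES:
        return None
    row, line_in_row = divmod(offset, LINES_PER_ROW)
    return row, line_in_row, line_in_row == 0


print(locate(49))   # None
print(locate(50))   # (0, 0, True)
print(locate(105))  # (6, 7, False)
print(locate(106))  # (7, 0, True)
```

Only the lines where the third value is true need a fresh set of 40 screencodes.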

Now, I'm not 100% sure of this, because the VIC-II is a complex beast, and I'm still quite new at this, but it seems to me that the halting only occurs at the start of the raster line. That is, the VIC-II draws out the right border of the last scan line of a text row while the CPU is still running. Then the raster register increments and matches a pattern (I'm speculating about internal implementational details here) that identifies that it's at the start of a new text row, and this triggers halting the CPU and reading in new screencodes.

The screencodes are read into 40 internal VIC-II registers. When composing a character data address the screencode value is being sourced from its internal copy of that data.

Earlier we saw that 23 cycles are spent within the right and left borders. Let's imagine then that 12 cycles are spent in the right border. That leaves 11 cycles in the left border before it hits the bitmapped area and has to start drawing pixels from memory. But at least 3 of those cycles have to be shared with the CPU waiting for it to free up the bus. That leaves 8 cycles where the VIC-II can read 2 bytes per cycle plus 3 cycles when it can read 1 byte per cycle allowing it to read in, let's say, 19 bytes. Surely this means it has to start drawing out the first characters before it has finished reading in all 40 screencodes. But while it is drawing the first 20 characters or so the CPU is still halted. This means it can use one CPU clock phase to grab the character bitmap data for a screencode it's already cached, and use the second clock phase to grab another screencode. At this rate it has enough time to grab all of the screencodes before it has to draw out the complete line.

I'm not sure exactly where it finds time in all of this to also grab the sprite data though. Maybe it grabs the sprite pointers during the 12 cycles spent drawing the right border? Now I'm way out on a limb. I really don't know. What I do know is that the CPU halting does not seem to happen while drawing out the right border, which is what we're about to talk about.

Implementing Splitscreen

It may seem crazy to you that I've just spent ~10,000 words talking about analog TV signals and then deep internal details about how the VIC-II manages to fetch data from memory in order to digitally generate that video signal, before finally coming to the part where we talk about splitscreen. But, it was necessary. We need to understand, at least to the degree that we do now, what is going on inside the VIC-II and the CPU's bad lines and why they exist, before we can tackle something like implementing a stable splitscreen.

The theory of how the splitscreen works should now be fairly straightforward to understand. A pair of VIC-II registers, 17 and 22, provide special bits that can be used to change the mode that the VIC-II draws in. We'll return to talk about hires bitmap mode at the end. But by now, it should be clear that whatever mode the VIC-II is in, it needs to be able to retrieve data from memory in real time as it's drawing the raster lines within the bitmapped area, such that it knows what pixels to draw out at that moment. Rather than being a completely mysterious process, we can now assume that a key difference between modes is the manner in which the VIC-II composes the data fetching addresses.

The idea of splitscreen then is quite simple. We just change the mode bits of the VIC-II while it's in the middle of rendering a frame, and then change it back at the end of the frame. Nothing to it, right?

Well, there are always some gotchas.

The very next pixel the VIC-II draws out will be drawn according to the new mode's interpretation of that data. And the very next memory fetch will use the address composition scheme of the new mode. It will pick up exactly where the previous memory fetch left off, though, because all the other internal counting registers are incrementing as normal. For example, the VC0 through VC9 counter doesn't get modified just because the VIC-II's mode is changed. Those bits represent where the raster beam is in screen matrix memory regardless of mode. And the raster counter is similarly unaffected by a change of mode, because it simply reflects whatever is the current raster line being drawn.

So what would happen if you just changed modes from say hires text mode to hires bitmap mode while the raster beam was in the middle of the screen? Essentially, from that point on in the raster line it would stop drawing text characters, and start reading in and drawing hires bitmap data. But the screencodes for that row of text would already have been cached, and they would start being interpreted as colors for the bitmap mode. If the only thing you were doing was a permanent switch from text mode to bitmap mode, it wouldn't be so bad. It might not look like the cleanest transition in the history of the Commodore 64, but it would only last for a 60th of a second before being overwritten by the full bitmap in the next frame.

In splitscreen though, the idea is that you have text in the top half and bitmap in the bottom half (or vice versa, or some other combination of modes) and that this divide between the two halves persists frame after frame. In this case if the mode change were happening in the middle of a raster line, every frame, you'd see a terribly ugly, distracting and probably jittering visual glitch. When the VIC-II is drawing in the border, though, a change in mode doesn't affect the border's color or appearance. When the VIC-II then starts drawing the bitmapped area on the next raster line, it would cleanly be in the new mode.

So the goal then is to change the mode while the raster beam is drawing in the border.

It can't just be any raster line though. We've already seen a bit of how the VIC-II draws its text mode. Every 8th raster line is the start of a text row. It pre-fetches the screencodes and stores them internally. Then it uses those screencodes as indexes into the character set bitmap for the next 8 raster lines. In bitmap mode, though, it still operates on 8x8 pixel cells. At the start of a new row it pre-fetches screencodes, but a "screencode" in bitmap mode is used for the colors of that cell. (We'll return to this topic at the very end of this post.) This means that even if you change mode while in a border, if you are in the middle, vertically, of an 8 pixel high row of cells then the screencodes that got cached while in text mode will continue to be used—but interpreted differently—in the remaining raster lines of this cell row.

For this reason it basically never makes sense to split the screen halfway through a cell row. The only way it could make sense is if the text characters in the split row also happened to be the right bit patterns to represent the colors you wanted in the lower half of that split row in the bitmap image. That's just not going to happen. Maybe some demo coding artist could fit these two together, like the folding back cover of a Mad magazine. But in the context of an operating system where both sides of the content are being dynamically generated from disparate sources, it's impossible. The split has to be made between two cell rows.

Now we know when to make the mode changes and why. This is where the fun begins.

The beam is racing along, drawing out the frames, 60 times a second (or 50 times a second in PAL). And we need to make a mode change while the raster beam is inside the side border on the last raster line of a cell row. If we make the mode change too early, before the raster beam enters the border, we're going to see a jittering glitch in the last cell or two of the last raster line of that cell. Not horrible, but not awesome. On the other hand, if we're too late, well, let's think about this. We're trying to do this on the last raster line of the cell row. That means we're mere cycles away from the start of the first raster line of the next cell row, but that's the raster line on which the VIC-II needs to halt the CPU to fetch all the new screencodes. That's a bad line.

If we are just one cycle too late, the VIC-II will halt the CPU before we're finished making the change. And by the time it wakes the CPU back up, it will already have drawn out a half a raster line or so in the wrong mode. And depending on what we were able to change in time it may have fetched and cached the wrong screencodes!

The change has to be made while the raster beam is in the righthand border of the last raster line of the cell row. If it makes it to the lefthand border of the next raster line down, it's too late. By my reckoning, this means we have a window of exactly 12 CPU cycles. That's very tight. Especially when you consider that an absolute write (the kind necessary to write to a VIC-II register) takes 4 CPU cycles.

The Raster Interrupt

Pulling off a splitscreen on the C64—at all—is really only possible because the VIC-II can produce a raster interrupt.

The raster interrupt is not that complicated. The raster register is both read and write. When you read it, it tells you on what line the raster beam is currently drawing. But if you write to it the VIC-II latches the written value into another internal register. Every time the raster register is incremented, it is compared to the number last written. If the two numbers match, an interrupt is generated. Generating an interrupt is as simple as the VIC-II lowering its IRQ pin, which is electrically connected to the CPU's IRQ pin.

There is more than one graphics-related event that can cause an interrupt. However, the VIC-II has two features to deal with this. First, the different types of interrupts can be enabled by setting the appropriate flags in the enable interrupt register (26). So even though you've written a value to the raster register, you can tell the VIC-II to stop generating an interrupt even when the raster counter hits that number. Additionally, the VIC-II sets flags in the interrupt register (25) to indicate what kind of event caused an interrupt.
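To show which bits are involved, here is a hedged Python sketch that computes the values you would write to registers 17, 18 and 26 to arm a raster interrupt on a given line, plus a check of the raster flag in register 25. The function names are invented. The sketch assumes that bit 0 of registers 25 and 26 is the raster flag, and that the 9th compare bit is written through bit 7 of register 17 in the same way the 9th raster bit is read from it.

```python
RASTER_FLAG = 0x01  # bit 0 of registers 25 and 26 (assumed raster source bit)


def arm_raster_irq(line: int, reg17: int, reg26: int) -> tuple[int, int, int]:
    """Return new values for registers 17, 18 and 26 to interrupt on `line`.

    reg17 and reg26 are the current contents, so unrelated bits are preserved.
    """
    new_reg18 = line & 0xFF
    new_reg17 = (reg17 & 0x7F) | (((line >> 8) & 1) << 7)
    new_reg26 = reg26 | RASTER_FLAG
    return new_reg17, new_reg18, new_reg26


def raster_irq_pending(reg25: int) -> bool:
    """True if the interrupt register says the raster compare fired."""
    return bool(reg25 & RASTER_FLAG)


print(arm_raster_irq(105, 0x1B, 0x00))  # (27, 105, 1)
print(arm_raster_irq(261, 0x1B, 0x00))  # (155, 5, 1)
print(raster_irq_pending(0x81))         # True
```

On the machine itself these would be three stores to the VIC-II, with register 17 needing a read-modify-write so the mode bits it also holds are left alone.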

The C64's interrupts are quite simple. There is no hardware interrupt controller. Even in the early days of computing, while the CPU only had a single IRQ and a single NMI line, more expensive systems included a hardware interrupt controller. An interrupt controller provides multiple separate interrupt lines which different devices can be configured to trigger. The hardware controller would then trigger the CPU's single IRQ line, but could prioritize simultaneous interrupts by telling the CPU which device triggered the interrupt, and allowing the CPU to run a custom service routine per device. The C64 has no such intermediate hardware interrupt controller. Instead, multiple devices are just hardwired to the CPU's IRQ or NMI lines. Devices wired to the IRQ line include the VIC-II, CIA 1, and potentially one or more devices via the expansion port. When an IRQ is triggered the software has to poll the various devices checking to see who has an IRQ flag set and for what reason.

Supposing then that we are only interested in creating a split between cell rows, we remember that the first raster line of the first cell row is 50. The first raster line of the second cell row is 58 and so on. Like this:

| Screen Row | Raster line |
|---|---|
| 0 | 50 |
| 1 | 58 |
| 2 | 66 |
| 3 | 74 |
| 4 | 82 |
| 5 | 90 |
| 6 | 98 |
| 7 | 106 |
| 8 | 114 |
| … | … |
| 23 | 234 |
| 24 | 242 |

If we wanted a new mode to start on the first line of cell row 7, we could set the raster register to 106 and enable raster interrupts. The problem is that this is the bad line. The CPU would hop into the interrupt service routine, but then it would also get halted and as we saw earlier it's too late to make a change.

Instead, we want to set the raster interrupt to one raster line before where the split shall occur. If we want to change mode starting on cell row 7, we can set the raster interrupt to occur on line 105. The interrupt then occurs at the very start of the last raster line of the cell row before the mode switch will take place. At this point we know we have exactly 63 cycles before the start of the next line, the bad line. We also know that we only have the final 12 cycles in which to actually make the mode change. 63 - 12 = 51, so, we have just 51 cycles to do all the preparatory work and calculations necessary to switch into the mode. This is not much time at all.
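The choice of interrupt line and the cycle budget can be written down as a small Python sketch. The names are hypothetical, the row numbering is the one from the table above, and the 12-cycle window is my own estimate from earlier in this post.

```python
FIRST_BITMAP_LINE = 50
LINES_PER_ROW = 8
CYCLES_PER_LINE = 63
WINDOW_CYCLES = 12      # estimated time spent in the right border


def split_irq_line(row: int) -> int:
    """Raster line to interrupt on so the new mode starts at text row `row`."""
    if not 1 <= row <= 24:
        raise ValueError("split must fall between two cell rows (1..24)")
    first_line_of_row = FIRST_BITMAP_LINE + row * LINES_PER_ROW
    return first_line_of_row - 1


prep_cycles = CYCLES_PER_LINE - WINDOW_CYCLES

print(split_irq_line(7))  # 105
print(prep_cycles)        # 51
```

Row 0 is excluded because a split there would not be a split at all, just a mode change in the top border.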

If you're wondering if there is some trick to knowing when you're within the magic 12-cycle window, there isn't really any trick. You just count and add up all the cycles of all the instructions you're using. This is why any good documentation of the 6502's instruction set includes the execution time. You can use the C64OS.com reference post 6502 / 6510 Instruction Set as a guide. An Absolute LDA for example takes 4 cycles.

Careful Timing

C64 OS is perhaps special, maybe even ambitious, in its requirements. I wanted the location of the split to be moveable in real time, by dragging the status bar up and down with the mouse. But C64 OS also allows the application to map the KERNAL in and out at its leisure.

If the KERNAL is mapped in, and an interrupt occurs, the CPU will jump through the KERNAL's defined vector, through the KERNAL's handler and into a routine pointed to by the workspace memory variables $0314/$0315. The KERNAL's handler takes exactly 29 cycles. In order to keep the math workable, C64 OS implements a nearly identical version of the KERNAL's interrupt handler that is pointed to by the RAM under the KERNAL. So, if the KERNAL is patched out when the interrupt occurs it jumps through the RAM vectors that point to the C64 OS version of the handler, which also takes exactly 29 cycles.

The problem is that they can't both call identical routines. The RAM based routine, when the KERNAL is patched out, has to backup the state of the memory map (the value at $01) and patch in at least I/O. But the ROM based routine, when the KERNAL is patched in, needs to leave the memory mapping alone. There are two modes that could lead you to get routed through the RAM based handler:

- You're in ALL RAM mode, or

- You're in RAM and I/O only mode

Technically if you're in RAM and I/O mode you don't need to patch in I/O, but believe it or not it takes more code and time to check if I/O is already patched in, and you actually don't need to care. If you come via the RAM routine, you can just INC $01 to guarantee that I/O will get patched in. If you're curious to know why, then I highly recommend reading my earlier post, The 6510 Processor Port. Many people gave me positive feedback about how helpful this was for understanding how the Processor Port is used to manage the C64's memory mapping.

C64 OS is not open source, but I'm sharing this snippet of code here, because I'm quite fond of it. Here is the routine I'm using to service the raster interrupt to split the screen.

Let's do a breakdown of how this works. vic_rom and vic_ram are two alternative start points. vic_rom is pointed to by the vector at $0314/$0315, so the KERNAL's ROM routine will run it. vic_ram is pointed to by the vector at $0312/$0313, (which is custom to C64 OS) so that C64 OS's nearly identical in-RAM IRQ handler will run this. The comments indicate that both the ROM and the RAM IRQ handler routines are exactly 29 cycles.

The vic_rom routine uses a NOP to kill 2 cycles, then it reads $01 into the accumulator and uses a BNE to skip into the shared part of the routine. The BNE is always taken because we know that the value at $01 is not zero, because we got here via the KERNAL's handler. Ergo the KERNAL must be patched in and $01 must be >0.

The vic_ram routine loads the current value of $01 into the accumulator, and then it increments $01 (whether necessary or not) to guarantee that I/O is now available. Then it simply falls through to the shared part of the routine.

Both of these start points use precisely 8 cycles, and result in I/O being mapped in and the accumulator holding the memory map that should be restored at the end. That's pretty damn sweet! No matter which way we got here, we're in the same state having used the same number of cycles. 37 cycles to be precise. So far so good.

Now, some things about the C64 OS built-in splitscreen are being taken for granted here. For example, text mode is always on the top, and therefore bitmap mode is always on the bottom. Additionally, other code is responsible for knowing where the split should occur and for copying color data from a color data buffer into the screen matrix memory below the split. All we have to do here is change modes.

We push the accumulator to the stack so the memory mapping can be restored later, and then load the X and Y registers with the two values necessary to turn on bitmap mode and to change the memory pointers to where the bitmap data is found. In C64 OS, bitmap data is always stored beneath the KERNAL ROM, so these values are static. All of this, up to now, has taken us 44 cycles. We then write the two mode values to the VIC-II's registers, which finish at cycles 48 and 52 respectively. I was worried that cycle 48 might be a hair too soon, but I was only guessing about exactly how many cycles are in the left border and how many are in the right border. From my experience, writing the bitmap mode change (register 17) ending at cycle 48 does not cause any visual glitches. And finishing the memory pointers write at cycle 52 is still well within the limit of 63.
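The cycle arithmetic above can be checked with a short tally. This is only a sketch: the instruction choices are inferred from the prose using standard 6502 cycle counts, not copied from the C64 OS source, and the 29-cycle handler overhead is the figure quoted from the code comments.

```python
# Cycle tally for the timing-critical path, using standard 6502 cycle counts.
# The instruction list is an inference from the description, not the real source.
HANDLER_OVERHEAD = 29  # quoted figure for both the ROM and RAM IRQ handlers

VIC_ROM_ENTRY = [("NOP", 2), ("LDA $01", 3), ("BNE (taken, same page)", 3)]
VIC_RAM_ENTRY = [("LDA $01", 3), ("INC $01", 5)]

SHARED = [
    ("PHA", 3),
    ("LDX #imm", 2),
    ("LDY #imm", 2),
    ("store to register 17", 4),  # absolute-addressed store
    ("store to register 24", 4),  # absolute-addressed store
]

def running_totals(entry):
    """Return (instruction, cycle at which it finishes) for one entry path."""
    total = HANDLER_OVERHEAD
    out = []
    for name, cycles in entry + SHARED:
        total += cycles
        out.append((name, total))
    return out

for label, entry in (("vic_rom", VIC_ROM_ENTRY), ("vic_ram", VIC_RAM_ENTRY)):
    print(label)
    for name, finished in running_totals(entry):
        print(f"  {name:<24} ends at cycle {finished}")
```

Both paths reach the shared code at cycle 37, and the two register stores finish at cycles 48 and 52, matching the figures in the text.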

Besides the bitmap flag in register 17 and the memory pointers in register 24, there is also the multicolor mode flag in register 22. Note that all of this IRQ service code is implemented in the C64 OS service KERNAL module, and it is also the service module that provides a routine for configuring C64 OS's graphics context. When the graphics context is set, the byte in the routine above at the mcmode label is written. The read is an immediate mode read, because that's fastest. So, the splitscreen servicing doesn't actually look up or compute this byte, it has its argument modified ahead of time. All it has to do is load immediate and write to the VIC-II's register 22.
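The patched-operand trick can be modelled in a few lines. In this sketch the routine is a byte array holding LDA #imm ($A9) followed by STA $D016 ($8D, low byte, high byte). The byte layout, the starting value $08, and the helper names are illustrative assumptions, not the C64 OS code.

```python
# Model of a self-modified immediate operand, in the style the post describes.
routine = bytearray([0xA9, 0x08, 0x8D, 0x16, 0xD0])
MCMODE = 1         # offset of the operand byte, standing in for the mcmode label
MULTICOLOR = 0x10  # bit 4 of VIC-II register 22 ($D016)

def set_graphics_context(multicolor):
    """Done ahead of time, outside the interrupt: patch the operand byte."""
    if multicolor:
        routine[MCMODE] |= MULTICOLOR
    else:
        routine[MCMODE] &= ~MULTICOLOR & 0xFF

def run_routine(vic):
    """Done inside the interrupt: load the patched byte and store it."""
    value = routine[MCMODE]                   # LDA #imm
    address = routine[3] | (routine[4] << 8)  # operand of STA, little-endian
    vic[address] = value                      # STA $D016

vic = {}
set_graphics_context(multicolor=True)
run_routine(vic)
print(hex(vic[0xD016]))
```

The interrupt path contains no lookup and no branch. If the write really is an LDA immediate followed by an absolute STA, it costs 2 plus 4 cycles, which would put the end of the register 22 write at cycle 58.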

As soon as the mode is changed, you can breathe a giant breath of relief. The race against the raster beam is over. The IRQ service routine is still running, it can do more things, but you are no longer under critical timing constraints.

The first thing we do is change both vectors (for RAM and ROM handlers) to the c64os_service routine. That one is the standard routine, the one that services the mouse and keyboard, updates the timers, blinks the cursor and so on. Next, we reset the VIC-II's raster register to 1, and reset the latch. Together these will cause the main IRQ service routine to run when the raster is at the very top, the top of the top border, of the next frame. Its first job will be to set the VIC-II's mode back to text. But this is not so timing critical because it is all happening inside the top border. It's never too early, and doesn't need to worry at all about being too late.
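The way the two routines hand off to each other can be pictured with a toy frame simulation. The line count and split line below are illustrative values, and the sketch assumes that the main routine is what arms the raster compare for the split line; the post only states that its first job is to switch back to text mode.

```python
# Toy simulation of the two raster interrupts taking turns within one frame.
LINES_PER_FRAME = 312  # PAL; an NTSC machine has fewer lines
SPLIT_LINE = 150       # hypothetical raster line of the split

def simulate_frame():
    """Return the video mode in effect at the end of each raster line."""
    mode = "bitmap"    # left over from the bottom of the previous frame
    compare = 1        # raster line that will trigger the next interrupt
    handler = "main"   # which routine the IRQ vectors point at
    modes = []
    for line in range(LINES_PER_FRAME):
        if line == compare:
            if handler == "main":
                mode = "text"                 # first job: back to text mode
                compare, handler = SPLIT_LINE, "split"
            else:
                mode = "bitmap"               # the timing-critical switch
                compare, handler = 1, "main"  # re-arm for the top of next frame
        modes.append(mode)
    return modes

modes = simulate_frame()
print(modes[0], modes[1], modes[SPLIT_LINE - 1], modes[SPLIT_LINE])
```

Line 0 still carries bitmap mode from the previous frame, but that line is inside the top border, which is why the switch back to text has so much slack.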

The last step is to restore the memory map by writing the accumulator back to $01.[4] Then comes the standard restoring of the registers before returning from the interrupt handler.

HiRes Bitmap Mode

I promised that I'd comment on HiRes Bitmap mode. So let's finish up by comparing the way text mode and bitmap mode differ.

Although text mode is more memory efficient than bitmap mode and as a consequence it is much faster to update the entire screen in text mode, in one critical way it is more complex than bitmap mode.

Text mode consists of several parts that come together. You have the 2K character set bitmap. You have the screen matrix memory, full of character pointers (otherwise known as screencodes.) Plus you have color memory, something we didn't touch on earlier. And you also have the background color register in the VIC-II itself.

The character pointers are an indirection. The VIC-II doesn't know exactly where it is going to get the data to render until it comes to a given text row. Then it reads in the character pointers and uses them at every turn to read in 1-Byte chunks of bitmap data from discontiguous addresses, and doing so consumes basically all of its memory access allowance. The question might be, where does it get its color data from? And how does it have time for those accesses?

The answer is that the VIC-II doesn't have just an 8-bit data bus, like everything else in the Commodore 64, but a 12-bit data bus. It has 4 data bus lines that connect it to the special 4-bit static Color RAM chip. Every time it reads a byte of bitmap data out of the character set, it can simultaneously, on an independent bus, read a 4-bit color nybble. And that's the color it uses for the foreground of that cell.

In addition to the VIC-II's 8-bit data bus, D0 to D7, it also has D8 to D11 connecting directly to the Color RAM (incorrectly labeled on the schematic as "ROM").

Now we know why text mode is limited to having one common background color for every cell, but a custom foreground color for each cell: there is physically no time to fetch color data from main memory. There is only time to fetch color data at all because it's coming from an extra bus. But that color bus is only 4 bits wide, and the Color RAM is only 4 bits wide. So there is only space and time enough to fetch a single 16-color value per cell. And the other color just comes from an internal register.
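The one-foreground-per-cell, one-shared-background rule can be made concrete with a small model of a single 8-pixel row of a text cell. The function and parameter names are illustrative, not VIC-II terminology.

```python
# Sketch of how one 8-pixel row of a text cell gets its colors.
def char_data_address(charset_base, screencode, row):
    """Each character occupies 8 consecutive bytes in the 2K character set."""
    return charset_base + screencode * 8 + row

def render_text_row(bitmap_byte, color_nybble, background):
    """Set bits take the cell's Color RAM nybble, clear bits the shared background."""
    fg = color_nybble & 0x0F
    return [fg if bitmap_byte & (0x80 >> x) else background for x in range(8)]

print(render_text_row(0b00111100, color_nybble=1, background=6))
# prints [6, 6, 1, 1, 1, 1, 6, 6]
```

The screencode is what makes the fetch indirect: the address of the bitmap byte cannot be computed until the character pointer has been read.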

Now we compare this with hires bitmap mode.

A bitmap takes up more memory. Instead of taking 2K plus 1000 indexes into that 2K, you just have 8K of bitmap data. But there are no indexes. There is no discontinuity. From nothing more than the base address, it is as though the VIC-II knows in advance where in memory it will fetch every byte to be rendered. It does these fetches using exactly the same internal counters, the Video Counter (VC0 to VC9) and the Raster Counter (RC0 to RC2), but it uses them in a novel address composition scheme.
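As a rough sketch of that composition: the three RC bits form the low bits of the address, the ten VC bits sit directly above them, and the 8K-aligned base supplies the rest. The function name and the example base of $2000 are assumptions chosen for illustration.

```python
def bitmap_fetch_address(bitmap_base, vc, rc):
    """Compose a bitmap byte address: RC supplies bits 0-2, VC bits 3-12."""
    if not (0 <= vc < 1000 and 0 <= rc < 8):
        raise ValueError("vc must be 0-999 and rc must be 0-7")
    return bitmap_base | (vc << 3) | rc

BASE = 0x2000  # illustrative 8K-aligned bitmap base

print(hex(bitmap_fetch_address(BASE, 0, 0)))   # first byte of the first cell
print(hex(bitmap_fetch_address(BASE, 0, 7)))   # last row of the first cell
print(hex(bitmap_fetch_address(BASE, 1, 0)))   # first row of the next cell
```

Because 1000 cells times 8 rows is 8000 bytes, the composed address never leaves the 8K block, and no index fetch is needed to find it.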

There is no need for fetching an index first, because this scheme is sufficient to address 8K of contiguous data. (If you think hard enough about how this address scheme works it also becomes apparent why C64 bitmap data is laid out with the "tile" structure it has.)
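Seen from the programmer's side, the same scheme dictates where a given pixel lives. The helper below is a hypothetical illustration that maps a hires pixel coordinate to a byte offset within the bitmap and a bit mask within that byte.

```python
def pixel_location(x, y):
    """Map a hires pixel (x 0-319, y 0-199) to (byte offset, bit mask)."""
    cell = (y // 8) * 40 + (x // 8)  # which 8x8 cell, counted left to right, top to bottom
    row = y % 8                      # which of the cell's 8 rows
    return cell * 8 + row, 0x80 >> (x % 8)

print(pixel_location(0, 1))  # one pixel down: the very next byte
print(pixel_location(8, 0))  # one cell right: 8 bytes further on
print(pixel_location(0, 8))  # one cell down: 320 bytes further on
```

Moving down one pixel inside a cell advances one byte, while moving right by one cell advances eight bytes. That is the "tile" layout: each cell's eight rows are stored together, just like a character definition.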

In this mode then, the VIC-II doesn't need the character pointers. However, all of the timing and logic has been worked out to allow it to fetch those character pointers, and the internal registers are already there to store them. If my programming instincts are worth anything, my guess is that it might even have been harder to prevent the chip from making these character pointer requests than just letting that logic run, even if those bytes weren't needed.

But of course, as it turns out, the character pointers are very useful. While the official color ram chip is only 4-bit, those unneeded character pointers are 8-bit. So, instead of ignoring the character pointers and using the color ram, it is the other way around. In hires bitmap mode, it is the color ram data that is ignored, and the character pointers are repurposed as color data. One nybble for a 16-color foreground, one nybble for a 16-color background, per cell.
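A minimal sketch of the repurposed character pointer follows. The post does not say which nybble is which; in the VIC-II's standard bitmap mode the upper nybble colors the set bits and the lower nybble colors the clear bits. The function name is illustrative.

```python
def render_hires_row(bitmap_byte, color_byte):
    """Set bits use the upper nybble of the screen matrix byte, clear bits the lower."""
    fg = (color_byte >> 4) & 0x0F
    bg = color_byte & 0x0F
    return [fg if bitmap_byte & (0x80 >> x) else bg for x in range(8)]

print(render_hires_row(0b11110000, 0x16))
# prints [1, 1, 1, 1, 6, 6, 6, 6]
```

Compared with text mode, both colors now come from the byte fetched over the main 8-bit bus, so each cell can have its own background as well as its own foreground.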

Final Thoughts

I have heard the VIC-II described as a kind of special microprocessor. However, in my opinion, it is only a kind of processor in a very loose sense. It doesn't have an instruction set, for example. It doesn't perform operations. I think a much better analogy is to compare the VIC-II to an old school video terminal.

A video terminal was not a computer. But it did have memory, and it had hardwired logic circuitry that allowed it, completely independently of the computer, to continuously loop over that memory and generate a stable video output signal. And, compared to the computer, it did so at a relatively high frequency. That is almost exactly a description of the VIC-II.

The main difference being that the VIC-II's memory is shared with the CPU. Thus allowing the CPU to directly manipulate any part of the memory while the VIC-II continues to render it, completely unawares. The VIC-II is also more tightly integrated into the computer, with the ability to generate interrupts, and it's on the address and data bus such that the CPU can manipulate its registers in real time. Nonetheless, I like the analogy.

I started with a reference to Star Trek Deep Space Nine, and I'd like to finish with another.

The central behavior of the VIC-II is its continuous rolling of numerous internal counters: the Video Counter, the Raster Counter, and surely others we can't even see, such as those that help it know where it is along a given raster line. Its memory sampling addresses are then composed by combining parts from these independently rotating wheels.

When the dots finally connected in my head about how it works, the first thing that came to mind was a scene in DS9. Rom, Quark's idiot savant brother, is reminding him how the mind of the most brilliant Ferengi alive works: "Wheels within wheels, brother," he says, tapping his temple. That's pretty much how the VIC-II works. Wheels within wheels.

Rom taps his temple and says to his brother Quark, 'Wheels within wheels, brother.'

I hope someone will find this post useful. But bear in mind, this is all very new to me. There are many areas of internal operation and implementation about which I am merely guessing. There also remain many foggy grey areas that I've yet to explore or understand.

If I've made any technical errors, and you know better than I how something I've described actually works, I would be overjoyed to be set straight. My goal in writing is to help me to clarify my own thoughts, and then to share the fruits of my efforts with you. But if I've made a mistake, then I want to know about it so that I can improve my own understanding, and I will update the relevant part of the text.

Happy Hallowe'en friends, and remember to keep enjoying your C64.

1. The explanation for 30 vs. 25 frames per second is driven by the difference in the standard frequency of the AC power mains. In North America and in some other parts of the world AC power is 60Hz, into which 30 fps divides evenly as two interlaced fields. In Europe where the mains are 50Hz, 25 frames per second was the standard.

2. It actually is ~7.99998, but even the 1.022727 is rounded slightly. So we can regard it as being 8 pixels per 6510 clock cycle.

3. We're ignoring sprites here, for the moment, because they add an extra layer of complication.

4. As I look at this code, I realize, I'm not really using the accumulator. I could change what resets the latch and raster register to the X or Y registers. I don't really need to pass the accumulator through the stack. I think I did that because at one point I was using A for something else. This can be improved by switching the PHA for a 3-cycle BIT ZP instruction.

Greg Naçu — C64OS.com